AI Media and Misinformation Content Analysis Tool

Project description

AMMICO - AI Media and Misinformation Content Analysis Tool

This package extracts data from images such as social media posts that contain an image part and a text part. The analysis can generate a very large number of features, depending on the user input. See our paper for a more in-depth description.

This project is currently under development!

Use pre-processed image files such as social media posts with comments and process to collect information:

- Text extraction from the images

- Language detection

- Translation into English or other languages

- Cleaning of the text, spell-check

- Sentiment analysis

- Named entity recognition

- Topic analysis

- Content extraction from the images

- Textual summary of the image content ("image caption") that can be analyzed further using the above tools

- Feature extraction from the images: User inputs query and images are matched to that query (both text and image query)

- Question answering

- Performing person and face recognition in images

- Face mask detection

- Age, gender and race detection

- Emotion recognition

- Color analysis

- Analyse hue and percentage of color on image

- Multimodal analysis

- Find best matches for image content or image similarity

- Cropping images to remove comments from posts

Installation

The AMMICO package can be installed using pip:

pip install ammico

This will install the package and its dependencies locally. If after installation you get some errors when running some modules, please follow the instructions below.

Compatibility problems solving

Some ammico components require tensorflow (e.g. Emotion detector), some pytorch (e.g. Summary detector). Sometimes there are compatibility problems between these two frameworks. To avoid these problems on your machines, you can prepare proper environment before installing the package (you need conda on your machine):

1. First, install tensorflow (https://www.tensorflow.org/install/pip)

-

create a new environment with python and activate it

conda create -n ammico_env python=3.10conda activate ammico_env -

install cudatoolkit from conda-forge

conda install -c conda-forge cudatoolkit=11.8.0 -

install nvidia-cudnn-cu11 from pip

python -m pip install nvidia-cudnn-cu11==8.6.0.163 -

add script that runs when conda environment

ammico_envis activated to put the right libraries on your LD_LIBRARY_PATHmkdir -p $CONDA_PREFIX/etc/conda/activate.d echo 'CUDNN_PATH=$(dirname $(python -c "import nvidia.cudnn;print(nvidia.cudnn.__file__)"))' >> $CONDA_PREFIX/etc/conda/activate.d/env_vars.sh echo 'export LD_LIBRARY_PATH=$CUDNN_PATH/lib:$CONDA_PREFIX/lib/:$LD_LIBRARY_PATH' >> $CONDA_PREFIX/etc/conda/activate.d/env_vars.sh source $CONDA_PREFIX/etc/conda/activate.d/env_vars.sh -

deactivate and re-activate conda environment to call script above

conda deactivateconda activate ammico_env -

install tensorflow

python -m pip install tensorflow==2.12.1

2. Second, install pytorch

-

install pytorch for same cuda version as above

python -m pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

3. After we prepared right environment we can install the ammico package

python -m pip install ammico

It is done.

Micromamba

If you are using micromamba you can prepare environment with just one command:

micromamba create --no-channel-priority -c nvidia -c pytorch -c conda-forge -n ammico_env "python=3.10" pytorch torchvision torchaudio pytorch-cuda "tensorflow-gpu<=2.12.3" "numpy<=1.23.4"

Windows

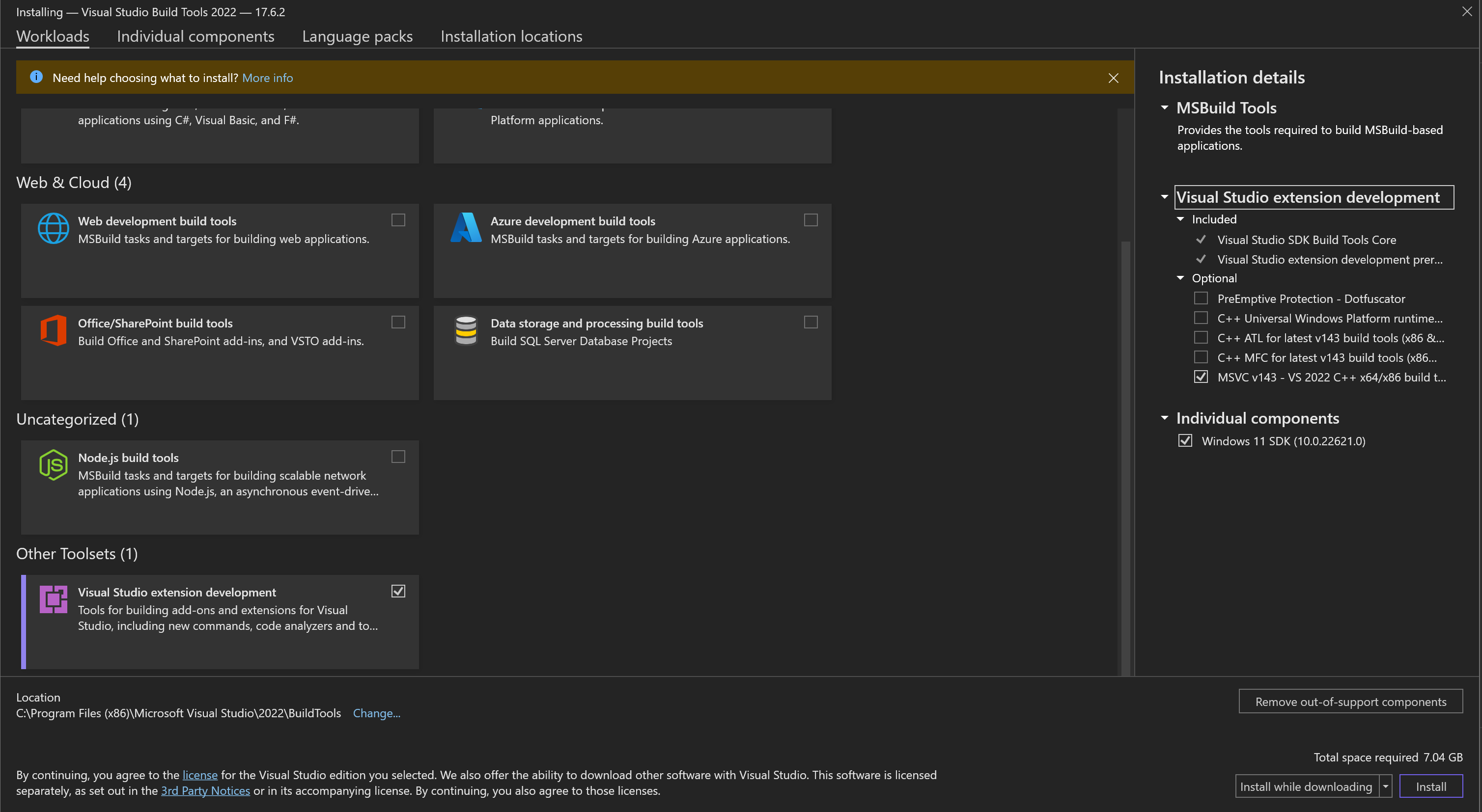

To make pycocotools work on Windows OS you may need to install vs_BuildTools.exe from https://visualstudio.microsoft.com/visual-cpp-build-tools/ and choose following elements:

Visual Studio extension developmentMSVC v143 - VS 2022 C++ x64/x86 build toolsWindows 11 SDKfor Windows 11 (orWindows 10 SDKfor Windows 10)

Be careful, it requires around 7 GB of disk space.

Usage

The main demonstration notebook can be found in the notebooks folder and also on google colab.

There are further sample notebooks in the notebooks folder for the more experimental features:

- Topic analysis: Use the notebook

get-text-from-image.ipynbto analyse the topics of the extraced text.

You can run this notebook on google colab: Here

Place the data files and google cloud vision API key in your google drive to access the data. - To crop social media posts use the

cropposts.ipynbnotebook. You can run this notebook on google colab: Here

Features

Text extraction

The text is extracted from the images using google-cloud-vision. For this, you need an API key. Set up your google account following the instructions on the google Vision AI website or as described here. You then need to export the location of the API key as an environment variable:

export GOOGLE_APPLICATION_CREDENTIALS="location of your .json"

The extracted text is then stored under the text key (column when exporting a csv).

Googletrans is used to recognize the language automatically and translate into English. The text language and translated text is then stored under the text_language and text_english key (column when exporting a csv).

If you further want to analyse the text, you have to set the analyse_text keyword to True. In doing so, the text is then processed using spacy (tokenized, part-of-speech, lemma, ...). The English text is cleaned from numbers and unrecognized words (text_clean), spelling of the English text is corrected (text_english_correct), and further sentiment and subjectivity analysis are carried out (polarity, subjectivity). The latter two steps are carried out using TextBlob. For more information on the sentiment analysis using TextBlob see here.

The Hugging Face transformers library is used to perform another sentiment analysis, a text summary, and named entity recognition, using the transformers pipeline.

Content extraction

The image content ("caption") is extracted using the LAVIS library. This library enables vision intelligence extraction using several state-of-the-art models, depending on the task. Further, it allows feature extraction from the images, where users can input textual and image queries, and the images in the database are matched to that query (multimodal search). Another option is question answering, where the user inputs a text question and the library finds the images that match the query.

Emotion recognition

Emotion recognition is carried out using the deepface and retinaface libraries. These libraries detect the presence of faces, and their age, gender, emotion and race based on several state-of-the-art models. It is also detected if the person is wearing a face mask - if they are, then no further detection is carried out as the mask prevents an accurate prediction.

Color/hue detection

Color detection is carried out using colorgram.py and colour for the distance metric. The colors can be classified into the main named colors/hues in the English language, that are red, green, blue, yellow, cyan, orange, purple, pink, brown, grey, white, black.

Cropping of posts

Social media posts can automatically be cropped to remove further comments on the page and restrict the textual content to the first comment only.

FAQ

What happens to the images that are sent to google Cloud Vision?

According to the google Vision API, the images that are uploaded and analysed are not stored and not shared with third parties:

We won't make the content that you send available to the public. We won't share the content with any third party. The content is only used by Google as necessary to provide the Vision API service. Vision API complies with the Cloud Data Processing Addendum.

For online (immediate response) operations (

BatchAnnotateImagesandBatchAnnotateFiles), the image data is processed in memory and not persisted to disk. For asynchronous offline batch operations (AsyncBatchAnnotateImagesandAsyncBatchAnnotateFiles), we must store that image for a short period of time in order to perform the analysis and return the results to you. The stored image is typically deleted right after the processing is done, with a failsafe Time to live (TTL) of a few hours. Google also temporarily logs some metadata about your Vision API requests (such as the time the request was received and the size of the request) to improve our service and combat abuse.

What happens to the text that is sent to google Translate?

According to google Translate, the data is not stored after processing and not made available to third parties:

We will not make the content of the text that you send available to the public. We will not share the content with any third party. The content of the text is only used by Google as necessary to provide the Cloud Translation API service. Cloud Translation API complies with the Cloud Data Processing Addendum.

When you send text to Cloud Translation API, text is held briefly in-memory in order to perform the translation and return the results to you.

What happens if I don't have internet access - can I still use ammico?

Some features of ammico require internet access; a general answer to this question is not possible, some services require an internet connection, others can be used offline:

- Text extraction: To extract text from images, and translate the text, the data needs to be processed by google Cloud Vision and google Translate, which run in the cloud. Without internet access, text extraction and translation is not possible.

- Image summary and query: After an initial download of the models, the

summarymodule does not require an internet connection. - Facial expressions: After an initial download of the models, the

facesmodule does not require an internet connection. - Multimodal search: After an initial download of the models, the

multimodal_searchmodule does not require an internet connection. - Color analysis: The

colormodule does not require an internet connection.

Project details

Download files

Download the file for your platform. If you're not sure which to choose, learn more about installing packages.

Source Distribution

Built Distribution

File details

Details for the file ammico-0.2.1.tar.gz.

File metadata

- Download URL: ammico-0.2.1.tar.gz

- Upload date:

- Size: 1.5 MB

- Tags: Source

- Uploaded using Trusted Publishing? Yes

- Uploaded via: twine/5.0.0 CPython/3.12.3

File hashes

| Algorithm | Hash digest | |

|---|---|---|

| SHA256 | 9456adabe9fa3de5d71355e3c65e533f791ed68075d21e2653bee3f4959c2df1 |

|

| MD5 | 82ec758b770fbb146b05cfeeac9155fa |

|

| BLAKE2b-256 | 623fbeee2e933e4c3ee3cda8d4408d3d91239b9be896759f79cc37c2479869aa |

File details

Details for the file ammico-0.2.1-py3-none-any.whl.

File metadata

- Download URL: ammico-0.2.1-py3-none-any.whl

- Upload date:

- Size: 1.5 MB

- Tags: Python 3

- Uploaded using Trusted Publishing? Yes

- Uploaded via: twine/5.0.0 CPython/3.12.3

File hashes

| Algorithm | Hash digest | |

|---|---|---|

| SHA256 | ed0c6f71500a6d988100df41adbce8aa734cf3bc06845a91558d8d276353085f |

|

| MD5 | d02a51364dde69fb1b7583a5a162f4fe |

|

| BLAKE2b-256 | ad6389c83f4aa27db351310c15a945e42b835f603c0f1d99d564c56229ef9b70 |