This repository contains an easy and intuitive approach to few-shot NER using most similar expansion over spaCy embeddings. Now with entity confidence scores!

Concise Concepts

When you want to apply NER to concise concepts, it is easy to come up with examples but difficult to train an entire pipeline. Concise Concepts uses few-shot NER based on word embedding similarity to get you going with ease! Now with entity scoring!
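To make the idea of expanding a few examples by embedding similarity concrete, here is a minimal sketch in plain Python. It is not the library's implementation: the `TOY_VECTORS` table, its values, and the `expand` helper are all hypothetical and exist only for illustration. Real embeddings have hundreds of dimensions and come from a trained model.

```python
import math

# Toy 3-dimensional "embeddings"; the values are invented for illustration only.
TOY_VECTORS = {
    "apple": [0.9, 0.1, 0.0],
    "pear": [0.8, 0.2, 0.0],
    "banana": [0.85, 0.15, 0.05],
    "beef": [0.0, 0.1, 0.9],
    "pork": [0.05, 0.1, 0.85],
    "chicken": [0.1, 0.2, 0.8],
}


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def expand(seeds, vectors, topn=1):
    """Return the topn non-seed words closest to the mean of the seed vectors."""
    dims = len(next(iter(vectors.values())))
    centroid = [sum(vectors[s][i] for s in seeds) / len(seeds) for i in range(dims)]
    candidates = [w for w in vectors if w not in seeds]
    ranked = sorted(candidates, key=lambda w: cosine(centroid, vectors[w]), reverse=True)
    return ranked[:topn]


print(expand(["apple", "pear"], TOY_VECTORS))  # ['banana']
print(expand(["beef", "pork"], TOY_VECTORS))   # ['chicken']
```

Each label's seed words pull in nearby words from the vector space, so a handful of examples can stand in for a much larger vocabulary.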

Install

pip install concise-concepts

Quickstart

import spacy

from spacy import displacy

import concise_concepts

data = {

"fruit": ["apple", "pear", "orange"],

"vegetable": ["broccoli", "spinach", "tomato"],

"meat": ["beef", "pork", "fish", "lamb"]

}

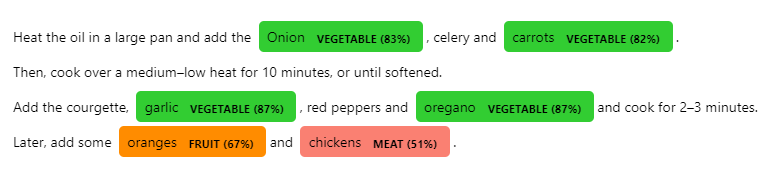

text = """

Heat the oil in a large pan and add the Onion, celery and carrots.

Then, cook over a medium–low heat for 10 minutes, or until softened.

Add the courgette, garlic, red peppers and oregano and cook for 2–3 minutes.

Later, add some oranges and chickens. """

nlp = spacy.load("en_core_web_lg", disable=["ner"])

# ent_score for entity confidence scoring

nlp.add_pipe("concise_concepts", config={"data": data, "ent_score": True})

doc = nlp(text)

options = {"colors": {"fruit": "darkorange", "vegetable": "limegreen", "meat": "salmon"},

"ents": ["fruit", "vegetable", "meat"]}

ents = doc.ents

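for ent in ents:
    # Show the confidence score as a percentage next to the label
    new_label = f"{ent.label_} ({float(ent._.ent_score):.0%})"
    # Reuse the base label's color for the new, score-annotated label
    options["colors"][new_label] = options["colors"].get(ent.label_.lower(), None)
    options["ents"].append(new_label)
    ent.label_ = new_label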

doc.ents = ents

displacy.render(doc, style="ent", options=options)

use specific number of words to expand over

data = {

"fruit": ["apple", "pear", "orange"],

"vegetable": ["broccoli", "spinach", "tomato"],

"meat": ["beef", "pork", "fish", "lamb"]

}

topn = [50, 50, 150]

assert len(topn) == len(data)

nlp.add_pipe("concise_concepts", config={"data": data, "topn": topn})
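The length check above suggests that `topn` carries one value per label. As a small sketch, assuming the entries line up with the keys of `data` in insertion order (an assumption, not something stated here), the pairing can be made explicit before building the config. The `pair_topn` helper is hypothetical and not part of the library.

```python
data = {
    "fruit": ["apple", "pear", "orange"],
    "vegetable": ["broccoli", "spinach", "tomato"],
    "meat": ["beef", "pork", "fish", "lamb"],
}
topn = [50, 50, 150]


def pair_topn(data, topn):
    """Map each label to its expansion size, assuming topn follows the key order of data."""
    if len(topn) != len(data):
        raise ValueError(f"expected {len(data)} topn values, got {len(topn)}")
    return dict(zip(data, topn))


print(pair_topn(data, topn))  # {'fruit': 50, 'vegetable': 50, 'meat': 150}
```

Printing the mapping before calling `nlp.add_pipe` makes it easy to spot a value attached to the wrong label.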

use word similarity to score entities

import spacy

import concise_concepts

data = {

"ORG": ["Google", "Apple", "Amazon"],

"GPE": ["Netherlands", "France", "China"],

}

text = """Sony was founded in Japan."""

nlp = spacy.load("en_core_web_lg")

nlp.add_pipe("concise_concepts", config={"data": data, "ent_score": True})

doc = nlp(text)

print([(ent.text, ent.label_, ent._.ent_score) for ent in doc.ents])

# output

#

# [('Sony', 'ORG', 0.63740385), ('Japan', 'GPE', 0.5896993)]
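One possible use of these scores is to drop low-confidence entities. The sketch below works on plain tuples shaped like the printed output above, so it runs without spaCy. The `filter_by_score` helper and the `0.6` threshold are illustrative assumptions, not library features or recommended values.

```python
# Tuples copied from the example output above: (text, label, score)
scored_ents = [("Sony", "ORG", 0.63740385), ("Japan", "GPE", 0.5896993)]


def filter_by_score(ents, min_score):
    """Keep only (text, label) pairs whose score is at least min_score."""
    return [(text, label) for text, label, score in ents if score >= min_score]


print(filter_by_score(scored_ents, 0.6))  # [('Sony', 'ORG')]
```

With a real `doc`, the same comparison could be applied to `ent._.ent_score` for each entity in `doc.ents`.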

use gensim.word2vec model from pre-trained gensim or custom model path

data = {

"fruit": ["apple", "pear", "orange"],

"vegetable": ["broccoli", "spinach", "tomato"],

"meat": ["beef", "pork", "fish", "lamb"]

}

# model from https://radimrehurek.com/gensim/downloader.html or path to local file

model_path = "glove-twitter-25"

nlp.add_pipe("concise_concepts", config={"data": data, "model_path": model_path})
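Before wiring a gensim model into the pipeline, it can help to see which neighbours it returns for your seed words. The sketch below uses gensim's downloader and `KeyedVectors.most_similar` directly. The `preview_expansion` helper is hypothetical, and whether the component queries the model in exactly this way is an assumption.

```python
def preview_expansion(seed_words, model_name="glove-twitter-25", topn=5):
    """Return the topn (word, similarity) pairs closest to the seed words."""
    # Imported lazily so that defining the helper does not require gensim.
    import gensim.downloader as api

    # Downloads the model on first use and caches it locally.
    vectors = api.load(model_name)
    return vectors.most_similar(positive=seed_words, topn=topn)
```

Calling `preview_expansion(["apple", "pear", "orange"])` needs gensim installed and network access on the first run. Seed words that are missing from the model's vocabulary may raise a `KeyError`.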

File details

Details for the file concise-concepts-0.4.1.tar.gz.

File metadata

- Download URL: concise-concepts-0.4.1.tar.gz

- Upload date:

- Size: 6.6 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: poetry/1.1.11 CPython/3.8.2 Windows/10

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 33ecb986e636740bcf79d5f523058b71009be7ed76e4a99e1dc0aab40617226d |
| MD5 | 3c5885a1dc846f2d8b6760e51decb0e1 |
| BLAKE2b-256 | cfb93fb3524a520aa1dbd81d8d0b933c9e76e42ef6ed45eb95a687349e125e23 |

File details

Details for the file concise_concepts-0.4.1-py3-none-any.whl.

File metadata

- Download URL: concise_concepts-0.4.1-py3-none-any.whl

- Upload date:

- Size: 8.2 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: poetry/1.1.11 CPython/3.8.2 Windows/10

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 70a8ee58f59c668b02110b3c756819e55776847fabb4589bc14e47c26e89d8a9 |
| MD5 | 05d5a504e267508e6f33905aecea1566 |
| BLAKE2b-256 | 185d2529faba69596a18fadac5e5ccd738fe548eedbf7d4af1ad58839379a01a |