DeepLC: Retention time prediction for (modified) peptides using Deep Learning.


Introduction

DeepLC is a retention time predictor for (modified) peptides that employs Deep Learning. Its strength lies in the fact that it can accurately predict retention times for modified peptides, even if it hasn't seen said modification during training.

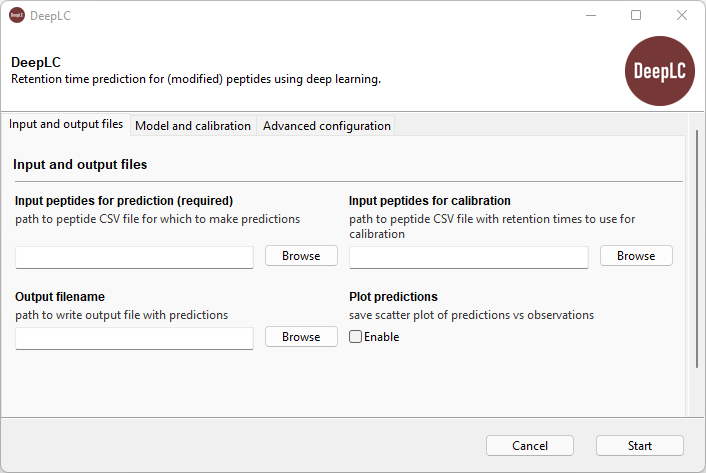

DeepLC can be used through the web application, locally with a graphical user interface (GUI), or as a Python package. In the latter case, DeepLC can be used from the command line, or as a Python module.

Citation

If you use DeepLC for your research, please use the following citation:

DeepLC can predict retention times for peptides that carry as-yet unseen modifications

Robbin Bouwmeester, Ralf Gabriels, Niels Hulstaert, Lennart Martens & Sven Degroeve

Nature Methods 18, 1363–1369 (2021) doi: 10.1038/s41592-021-01301-5

Usage

Web application

Just go to iomics.ugent.be/deeplc and get started!

Graphical user interface

In an existing Python environment (cross-platform)

- In your terminal with Python (>=3.7) installed, run pip install deeplc[gui]

- Start the GUI with the command deeplc-gui or python -m deeplc.gui

Standalone installer (Windows)

- Download the DeepLC installer (DeepLC-...-Windows-64bit.exe) from the latest release

- Execute the installer

- If Windows Smartscreen shows a popup window with "Windows protected your PC", click on "More info" and then on "Run anyway". You will have to trust us that DeepLC does not contain any viruses, or you can check the source code 😉

- Go through the installation steps

- Start DeepLC!

Python package

Installation

Install with conda, using the bioconda and conda-forge channels:

conda install -c bioconda -c conda-forge deeplc

Or install with pip:

pip install deeplc

Command line interface

To use the DeepLC CLI, run:

deeplc --file_pred <path/to/peptide_file.csv>

We highly recommend adding a peptide file with known retention times for calibration:

deeplc --file_pred <path/to/peptide_file.csv> --file_cal <path/to/peptide_file_with_tr.csv>

For an overview of all CLI arguments, run deeplc --help.
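When DeepLC is called from a script, the same command can be assembled programmatically. The sketch below only uses the --file_pred and --file_cal flags shown above; the helper name build_deeplc_command and the file names are hypothetical and not part of DeepLC.

```python
import shlex


def build_deeplc_command(file_pred, file_cal=None):
    """Assemble the argument list for the deeplc CLI (hypothetical helper)."""
    cmd = ["deeplc", "--file_pred", str(file_pred)]
    if file_cal is not None:
        # Calibration is optional, but recommended.
        cmd += ["--file_cal", str(file_cal)]
    return cmd


cmd = build_deeplc_command("peptides.csv", "peptides_with_tr.csv")
print(shlex.join(cmd))

# To actually run the command (requires DeepLC to be installed):
# import subprocess
# subprocess.run(cmd, check=True)
```

Passing the arguments as a list avoids shell quoting issues with paths that contain special characters.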

Python module

Minimal example:

import pandas as pd

from deeplc import DeepLC

peptide_file = "datasets/test_pred.csv"

calibration_file = "datasets/test_train.csv"

pep_df = pd.read_csv(peptide_file, sep=",")

pep_df['modifications'] = pep_df['modifications'].fillna("")

cal_df = pd.read_csv(calibration_file, sep=",")

cal_df['modifications'] = cal_df['modifications'].fillna("")

dlc = DeepLC()

dlc.calibrate_preds(seq_df=cal_df)

preds = dlc.make_preds(seq_df=pep_df)

For a more elaborate example, see examples/deeplc_example.py .

Input files

DeepLC expects comma-separated values (CSV) with the following columns:

- seq: unmodified peptide sequences

- modifications: MS2PIP-style formatted modifications. Every modification is listed as location|name, separated by a pipe (|) between the location, the name, and other modifications. location is an integer counted starting at 1 for the first AA. 0 is reserved for N-terminal modifications, -1 for C-terminal modifications. name has to correspond to a Unimod (PSI-MS) name.

- tr: retention time (only required for calibration)

For example:

seq,modifications,tr

AAGPSLSHTSGGTQSK,,12.1645

AAINQKLIETGER,6|Acetyl,34.095

AANDAGYFNDEMAPIEVKTK,12|Oxidation|18|Acetyl,37.3765

See examples/datasets for more examples.
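To make the modifications column concrete, here is a minimal sketch that converts between the location|name string and a list of (location, name) pairs. The function names are hypothetical helpers for illustration; they are not part of the DeepLC API.

```python
def parse_modifications(mod_string):
    """Split a string such as '12|Oxidation|18|Acetyl' into (location, name) tuples."""
    if not mod_string:
        # An empty field means the peptide is unmodified.
        return []
    parts = mod_string.split("|")
    if len(parts) % 2 != 0:
        raise ValueError(f"Expected location|name pairs, got: {mod_string!r}")
    # Even positions hold locations, odd positions hold names.
    return [(int(parts[i]), parts[i + 1]) for i in range(0, len(parts), 2)]


def format_modifications(mods):
    """Join (location, name) tuples back into a single pipe-separated string."""
    return "|".join(f"{location}|{name}" for location, name in mods)


print(parse_modifications("12|Oxidation|18|Acetyl"))
print(format_modifications([(0, "Acetyl"), (6, "Oxidation")]))
```

Location 0 (N-terminal) and -1 (C-terminal) pass through unchanged, since they are ordinary integers in this representation.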

Prediction models

DeepLC comes with multiple CNN models trained on data from various experimental settings. By default, DeepLC selects the best model based on the calibration dataset. If no calibration is performed, the first default model is selected. Always record which models and which DeepLC version you used. The current version comes with:

| Model filename | Experimental settings | Publication |
|---|---|---|
| full_hc_PXD005573_mcp_8c22d89667368f2f02ad996469ba157e.hdf5 | Reverse phase | Bruderer et al. 2017 |
| full_hc_PXD005573_mcp_1fd8363d9af9dcad3be7553c39396960.hdf5 | Reverse phase | Bruderer et al. 2017 |
| full_hc_PXD005573_mcp_cb975cfdd4105f97efa0b3afffe075cc.hdf5 | Reverse phase | Bruderer et al. 2017 |

For all the full models that can be used in DeepLC (including some TMT models!) please see:

https://github.com/RobbinBouwmeester/DeepLCModels

Naming convention for the models is as follows:

[full_hc]_[dataset]_[fixed_mods]_[hash].hdf5

The different parts refer to:

full_hc - flag to indicate a finished, trained, and fully optimized model

dataset - name of the dataset used to fit the model (see the original publication, supplementary table 2)

fixed_mods - flag to indicate fixed modifications were added to peptides without explicit indication (e.g., carbamidomethyl of cysteine)

hash - indicates different architectures, where "1fd8363d9af9dcad3be7553c39396960" indicates CNN filter lengths of 8, "cb975cfdd4105f97efa0b3afffe075cc" indicates CNN filter lengths of 4, and "8c22d89667368f2f02ad996469ba157e" indicates filter lengths of 2
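As an illustration of the naming convention, the sketch below extracts the hash from a model filename and looks up the CNN filter length listed above. The function describe_model is a hypothetical helper; it assumes the hash is always the last underscore-separated part before the file extension.

```python
# Filter lengths per architecture hash, as listed in the naming convention.
FILTER_LENGTH_BY_HASH = {
    "1fd8363d9af9dcad3be7553c39396960": 8,
    "cb975cfdd4105f97efa0b3afffe075cc": 4,
    "8c22d89667368f2f02ad996469ba157e": 2,
}


def describe_model(filename):
    """Return the hash and filter length encoded in a model filename."""
    stem = filename.rsplit(".", 1)[0]           # drop the .hdf5 extension
    prefix, model_hash = stem.rsplit("_", 1)    # hash is the last "_" part
    return {
        "finished": prefix.startswith("full_hc"),
        "hash": model_hash,
        # None if the hash is not one of the three listed architectures.
        "filter_length": FILTER_LENGTH_BY_HASH.get(model_hash),
    }


print(describe_model("full_hc_PXD005573_mcp_8c22d89667368f2f02ad996469ba157e.hdf5"))
```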

Q&A

Q: Is it required to indicate fixed modifications in the input file?

Yes, even modifications like carbamidomethyl should be in the input file.

Q: So DeepLC is able to predict the retention time for any modification?

Yes, DeepLC can predict the retention time of any modification. However, if the modification is very different from anything the model has seen during training, the accuracy might not be satisfactory. For example, if the model has never seen a phosphorus atom before, the accuracy of the prediction is going to be low.

Q: Installation fails. Why?

Please make sure to install DeepLC in a path that does not contain spaces. Run the latest LTS version of Ubuntu or Windows 10. Make sure you have enough disk space available; TensorFlow needs a surprising amount of it. If you are still not able to install DeepLC, please feel free to contact us:

Robbin.Bouwmeester@ugent.be and Ralf.Gabriels@ugent.be

Q: I have a special use case that is not supported. Can you help?

Of course, please feel free to contact us:

Robbin.Bouwmeester@ugent.be and Ralf.Gabriels@ugent.be

Q: DeepLC runs out of memory. What can I do?

You can try to reduce the batch size. DeepLC should be able to run if the batch size is low enough, even on machines with only 4 GB of RAM.

Q: I have a graphics card, but DeepLC is not using the GPU. Why?

For now DeepLC defaults to the CPU instead of the GPU. Clearly, because you want to use the GPU, you are a power user :-). If you want to make the most of that expensive GPU, you need to change or remove the following line (at the top) in deeplc.py:

# Set to force CPU calculations

os.environ['CUDA_VISIBLE_DEVICES'] = '-1'

Also change the same line in the function reset_keras():

# Set to force CPU calculations

os.environ['CUDA_VISIBLE_DEVICES'] = '-1'

Either remove the line or change it to the index of the GPU to use (CUDA_VISIBLE_DEVICES takes a comma-separated list of device indices, so '0' selects the first GPU):

# Make the first GPU visible to TensorFlow

os.environ['CUDA_VISIBLE_DEVICES'] = '0'

Q: What modification name should I use?

The names from Unimod are used. The PSI-MS name is used by default, but the Interim name is used as a fall-back if the PSI-MS name is not available. Please also see unimod_to_formula.csv in the folder unimod/ for the naming of specific modifications.

Q: I have a modification that is not in Unimod. How can I add the modification?

In the folder unimod/ there is the file unimod_to_formula.csv that can be used to add modifications. In the CSV file add a name (that is unique and not present yet) and the change in atomic composition. For example:

Met->Hse,O,H(-2) C(-1) S(-1)

Make sure to use negative signs for the atoms subtracted.
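To check a composition string before adding it to the CSV file, something like the following sketch can help. It assumes the composition is written as space-separated element(count) tokens, as in the example above, with a missing count meaning one atom; parse_composition is a hypothetical helper and not part of DeepLC.

```python
import re

# One token: an element symbol (optionally with an isotope prefix such as 13C),
# optionally followed by a signed count in parentheses.
_TOKEN = re.compile(r"^([0-9]*[A-Za-z]+)(?:\((-?\d+)\))?$")


def parse_composition(composition):
    """Parse 'H(-2) C(-1) S(-1)' into {'H': -2, 'C': -1, 'S': -1}."""
    atoms = {}
    for token in composition.split():
        match = _TOKEN.match(token)
        if match is None:
            raise ValueError(f"Cannot parse composition token: {token!r}")
        element, count = match.group(1), match.group(2)
        # A token without an explicit count stands for a single atom.
        atoms[element] = atoms.get(element, 0) + (int(count) if count else 1)
    return atoms


print(parse_composition("H(-2) C(-1) S(-1)"))
```

A token that fails to parse raises a ValueError, which catches typos such as a missing closing parenthesis before they end up in the file.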

Q: Help, all my predictions are between [0,10]. Why?

It is likely you did not use calibration. No problem, but the retention times for training purposes were normalized between [0,10]. This means that you probably need to adjust the retention times yourself after analysis or use a calibration set as the input.

Q: What does the option dict_divider do?

This parameter defines the precision to use for fast lookup of retention times for calibration. A value of 10 means a precision of 0.1 (and 100 a precision of 0.01) between the calibration anchor points. This parameter does not influence the precision of the calibration, but setting it too high might lead to poor model selection between anchor points. A safe value is usually higher than 10.

Q: What does the option split_cal do?

The option split_cal, or split calibration, sets the number of divisions of the chromatogram for piecewise linear calibration. If the value is set to 10, the chromatogram is split up into 10 equidistant parts. For each part the median value of the calibration peptides is selected. These are the anchor points. Between each anchor point a linear fit is made. This option has no effect when the pyGAM generalized additive models are used for calibration.
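The idea behind piecewise linear calibration can be sketched in a few lines of plain Python. This is an illustration of the description above under simplifying assumptions, not DeepLC's actual implementation: the function names are hypothetical, empty parts are skipped, and values outside the outermost anchor points are clamped rather than extrapolated.

```python
from bisect import bisect_right
from statistics import median


def fit_anchor_points(pred_cal, obs_cal, split_cal=10):
    """Split the predicted range into equidistant parts and take medians as anchors."""
    low, high = min(pred_cal), max(pred_cal)
    width = (high - low) / split_cal
    parts = [[] for _ in range(split_cal)]
    for pred, obs in zip(pred_cal, obs_cal):
        # The maximum value falls into the last part.
        idx = min(int((pred - low) / width), split_cal - 1) if width > 0 else 0
        parts[idx].append((pred, obs))
    # One (median predicted, median observed) anchor per non-empty part.
    return [
        (median(p for p, _ in part), median(o for _, o in part))
        for part in parts
        if part
    ]


def calibrate(anchors, pred):
    """Linearly interpolate a prediction between its two neighbouring anchors."""
    xs = [x for x, _ in anchors]
    if pred <= xs[0]:
        return anchors[0][1]
    if pred >= xs[-1]:
        return anchors[-1][1]
    i = bisect_right(xs, pred)
    (x0, y0), (x1, y1) = anchors[i - 1], anchors[i]
    return y0 + (y1 - y0) * (pred - x0) / (x1 - x0)


# Toy calibration set where observed = 2 * predicted + 5.
predicted = [i / 10 for i in range(101)]
observed = [2 * p + 5 for p in predicted]
anchors = fit_anchor_points(predicted, observed, split_cal=10)
print(calibrate(anchors, 5.0))
```

A higher split_cal follows local deviations in the gradient more closely, but each part then contains fewer calibration peptides, so the medians become noisier.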

Q: How does the ensemble part of DeepLC work?

Models within the same directory are grouped if they overlap in their name. The overlap has to be in their full name, except for the last part of the name after a "_"-character.

The following models will be grouped:

full_hc_dia_fixed_mods_a.hdf5

full_hc_dia_fixed_mods_b.hdf5

None of the following models will be grouped:

full_hc_dia_fixed_mods2_a.hdf5

full_hc_dia_fixed_mods_b.hdf5

full_hc_dia_fixed_mods_2_b.hdf5
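The grouping rule can be reproduced with a short sketch that uses everything before the last "_" as the group key. The function group_models is a hypothetical illustration of the rule described above, not DeepLC's own code.

```python
from collections import defaultdict


def group_models(filenames):
    """Group model files that share the same name up to the last underscore."""
    groups = defaultdict(list)
    for name in filenames:
        stem = name.rsplit(".", 1)[0]        # drop the file extension
        prefix = stem.rsplit("_", 1)[0]      # drop the part after the last "_"
        groups[prefix].append(name)
    return dict(groups)


print(group_models([
    "full_hc_dia_fixed_mods_a.hdf5",
    "full_hc_dia_fixed_mods_b.hdf5",
]))
print(group_models([
    "full_hc_dia_fixed_mods2_a.hdf5",
    "full_hc_dia_fixed_mods_b.hdf5",
    "full_hc_dia_fixed_mods_2_b.hdf5",
]))
```

The first call yields a single group with two models; the second yields three groups of one model each, matching the two lists above.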

Q: I would like to take the ensemble average of multiple models, even if they are trained on different datasets. How can I do this?

Feel free to experiment! Models within the same directory are grouped if they overlap in their name. The overlap has to be in their full name, except for the last part of the name after a "_"-character.

The following models will be grouped:

model_dataset1.hdf5

model_dataset2.hdf5

So you just need to rename your models.
