Deep Continuous Quantile Regression

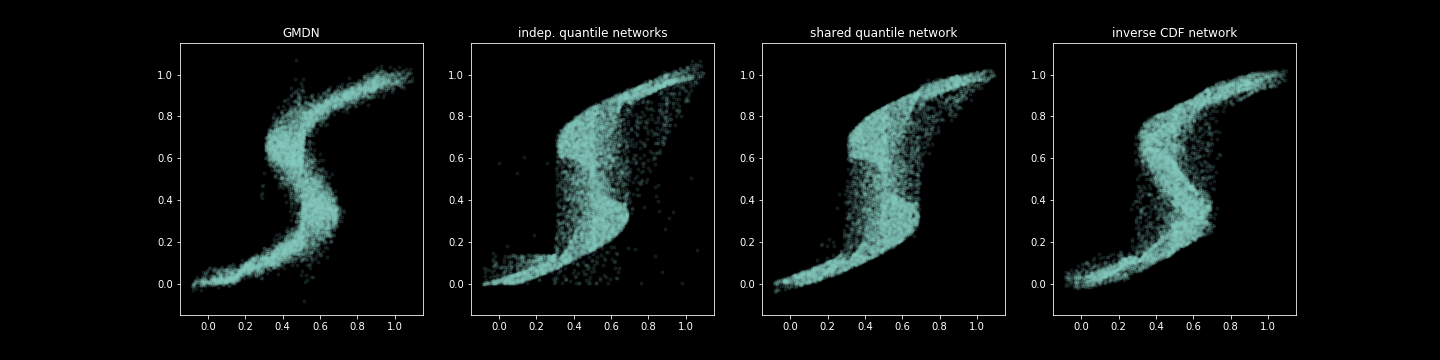

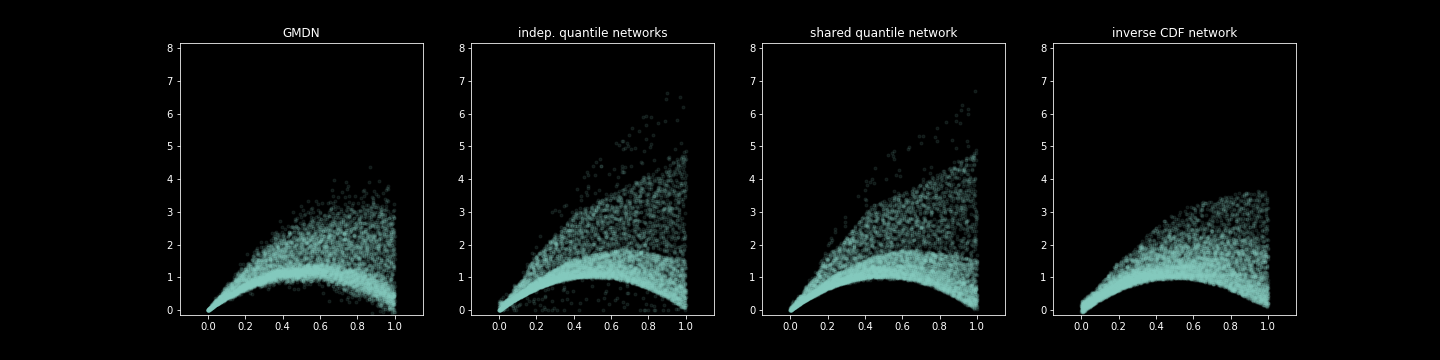

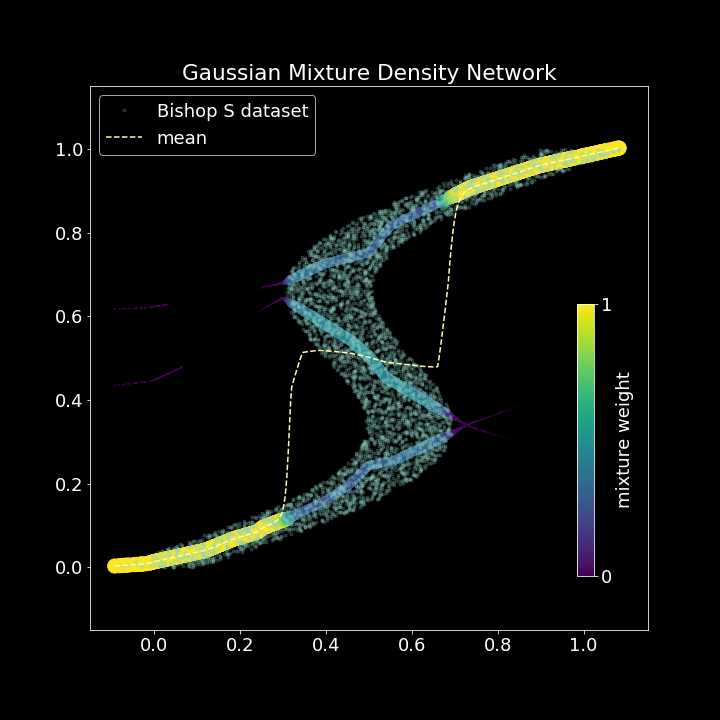

This package explores different approaches to learning the uncertainty and, more generally, the conditional distribution of the target variable. We introduce a new type of network, the "Deep Continuous Quantile Regression Network", which approximates the inverse conditional CDF directly with a multi-layer perceptron instead of relying on variational methods, which require priors on the functional form of the distribution. In many cases we find that it is a robust alternative to the well-known Mixture Density Networks.

This is particularly important when:

- the mean of the target variable is not sufficient for the use case

- the errors are heteroscedastic, i.e. vary depending on input features

- the errors are skewed, making a single summary statistic such as variance inadequate.
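A small illustration of the last two conditions: the sketch below generates hypothetical toy data (it does not use `deepquantiles.datasets`) in which the noise scale grows with x and the noise is exponential, hence right-skewed. The width of the central 90% interval changes with x and the mean sits above the median, so neither a conditional mean nor a single variance summarises the target well.

```python
import numpy as np

# Hypothetical toy data (not from the package's datasets module):
# the noise scale grows with x (heteroscedastic) and the noise is drawn
# from an exponential distribution (right-skewed).
rng = np.random.default_rng(0)
n = 100_000
x = rng.uniform(0.0, 1.0, size=n)
noise = rng.exponential(scale=0.1 + x)  # one scale per sample
y = x + noise


def interval_width(mask, lo=0.05, hi=0.95):
    """Width of the central 90% interval of y within a slice of x."""
    q_lo, q_hi = np.quantile(y[mask], [lo, hi])
    return q_hi - q_lo


narrow = interval_width(x < 0.1)  # small noise scale
wide = interval_width(x > 0.9)    # large noise scale
print(f"90% interval width: x<0.1 -> {narrow:.3f}, x>0.9 -> {wide:.3f}")

# With right-skewed noise the conditional mean sits above the conditional median.
slice_ = x > 0.9
print("mean - median at x>0.9:", np.mean(y[slice_]) - np.median(y[slice_]))
```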

We explore two main approaches:

- fitting a mixture density model

- learning the location of conditional quantiles, q, of the distribution.
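For the first approach, the network outputs the parameters of a mixture, `p(y | x) = sum_k pi_k(x) * N(y; mu_k(x), sigma_k(x)^2)`, and is trained by minimising the negative log-likelihood of the observed y. A minimal NumPy sketch of that loss follows; Gaussian components, softmax-normalised weights and log-standard-deviation outputs are assumptions (a common parameterisation following Bishop, 1994), not necessarily what `MixtureDensityRegressor` uses internally.

```python
import numpy as np


def mdn_nll(y, pi_logits, mu, log_sigma):
    """Mean negative log-likelihood of y under a Gaussian mixture.

    y:          shape (n,)    observed targets
    pi_logits:  shape (n, K)  unnormalised mixture weights (softmax applied here)
    mu:         shape (n, K)  component means
    log_sigma:  shape (n, K)  log of component standard deviations
    """
    y = y[:, None]  # broadcast targets against the K components
    # log of the softmax-normalised mixture weights
    log_pi = pi_logits - np.logaddexp.reduce(pi_logits, axis=1, keepdims=True)
    # log N(y; mu, sigma^2) for every component
    log_norm = (-0.5 * np.log(2.0 * np.pi) - log_sigma
                - 0.5 * ((y - mu) / np.exp(log_sigma)) ** 2)
    # log-sum-exp over components for numerical stability
    log_lik = np.logaddexp.reduce(log_pi + log_norm, axis=1)
    return -np.mean(log_lik)


# Sanity check: one standard-normal component evaluated at y = 0.
print(mdn_nll(np.array([0.0]), np.zeros((1, 1)), np.zeros((1, 1)), np.zeros((1, 1))))
```

With a single standard-normal component and y = 0 the loss reduces to 0.5 * log(2π) ≈ 0.919; splitting that component into two identical halves leaves the value unchanged, which is a quick check that the weights are normalised correctly.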

Our mixture density network exploits an implementation trick to achieve negative-log-likelihood minimisation in Keras.

The same trick is used to optimize the "pinball" loss in quantile regression networks, and in fact it can be used to optimize an arbitrary loss function of (X, y, y_hat).
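The pinball loss for a quantile level q penalises under-prediction with weight q and over-prediction with weight 1 - q, so its expected value is minimised by the q-quantile. The NumPy sketch below evaluates it for several quantile levels at once and sums over levels, roughly as a multi-output network (approach b below) might; the equal-weight sum is an assumption for illustration, and the Keras mechanics are not shown.

```python
import numpy as np


def pinball_loss(y, y_hat, quantiles):
    """Mean pinball (quantile) loss, summed over several quantile levels.

    y:         shape (n,)    observed targets
    y_hat:     shape (n, k)  predicted quantiles, one column per level
    quantiles: shape (k,)    quantile levels in (0, 1)
    """
    q = np.asarray(quantiles, dtype=float)[None, :]
    r = y[:, None] - y_hat  # residuals, shape (n, k)
    # rho_q(r) = max(q * r, (q - 1) * r): under-prediction costs q per unit,
    # over-prediction costs (1 - q) per unit
    loss = np.maximum(q * r, (q - 1.0) * r)
    return loss.mean(axis=0).sum()


# Minimising over constant predictions recovers the empirical 0.9-quantile.
rng = np.random.default_rng(0)
y = rng.exponential(size=10_000)
grid = np.linspace(0.0, 5.0, 2001)
losses = [pinball_loss(y, np.full((y.size, 1), c), [0.9]) for c in grid]
best = grid[int(np.argmin(losses))]
print(best, np.quantile(y, 0.9))
```

For a unit exponential the true 0.9-quantile is ln(10) ≈ 2.30, so both printed values should land near it. Replacing the constant with a model of X gives conditional quantile regression.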

Within the quantile-based approach, we further explore:

a. fitting a separate model to predict each quantile

b. fitting a multi-output network to predict multiple quantiles simultaneously

c. learning a regression on X and q simultaneously, thus effectively learning the complete (conditional) cumulative distribution function.
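Approach c can be sketched with a linear model standing in for the multi-layer perceptron (a simplifying assumption; this is not the package's `InverseCDFRegressor`). A fresh quantile level q is drawn uniformly for every sample at every step and appended to the input, and the pinball loss at that q is minimised by subgradient descent. The toy data are hypothetical: y = x + u with u ~ U(0, 1), so the true inverse conditional CDF is x + q.

```python
import numpy as np

# Hypothetical toy problem: y = x + u, u ~ U(0, 1),
# so the true inverse conditional CDF is F^{-1}(q | x) = x + q.
rng = np.random.default_rng(0)
n = 20_000
x = rng.uniform(0.0, 1.0, size=n)
y = x + rng.uniform(0.0, 1.0, size=n)


def features(x, q):
    # Linear stand-in for the MLP: y_hat = w . [1, x, q]
    return np.column_stack([np.ones_like(x), x, q])


w = np.zeros(3)
lr = 0.5
for _ in range(1500):
    # Draw a new quantile level per sample and step, so one model is
    # trained on the pinball loss across the whole range of q.
    q = rng.uniform(0.0, 1.0, size=n)
    phi = features(x, q)
    r = y - phi @ w
    # Subgradient of max(q*r, (q-1)*r) with respect to y_hat
    g = np.where(r > 0, -q, 1.0 - q)
    w -= lr * (phi * g[:, None]).mean(axis=0)

print("learned weights [bias, x, q]:", np.round(w, 2))  # true values: [0, 1, 1]
```

If the learned model is increasing in q, drawing q ~ U(0, 1) and evaluating it at (x, q) yields samples from the estimated conditional distribution (inverse transform sampling), so a single trained model provides any quantile, or samples, on demand.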

Installation

Install the package from source:

pip install git+https://github.com/ig248/deepquantiles

Or from PyPI:

pip install deepquantiles

Usage

from deepquantiles import MultiQuantileRegressor, InverseCDFRegressor, MixtureDensityRegressor

As this package is largely an experiment, please explore the Jupyter notebooks and expect to look at the source code.

Content

- deepquantiles.regressors: implementation of the core algorithms

- deepquantiles.presets: a collection of pre-configured estimators and settings used in experiments

- deepquantiles.datasets: functions used for generating test data

- deepquantiles.nb_utils: helper functions used in notebooks

- notebooks: Jupyter notebooks with examples and experiments

Tests

Run:

make dev-install

make lint

make test

References

Mixture Density Networks, Christopher M. Bishop, NCRG/94/004 (1994)

