Deep Insight And Neural Network Analysis


DIANNA is a Python package that brings explainable AI (XAI) to your research project. It wraps carefully selected XAI methods in a simple, uniform interface. It's built by, with and for (academic) researchers and research software engineers working on machine learning projects.

Why DIANNA?

DIANNA addresses the needs of both (X)AI researchers and, above all, scientists in various domains who use or will use AI models in their research without being (X)AI experts. DIANNA is future-proof: it is one of the very few XAI libraries supporting the Open Neural Network Exchange (ONNX) format.

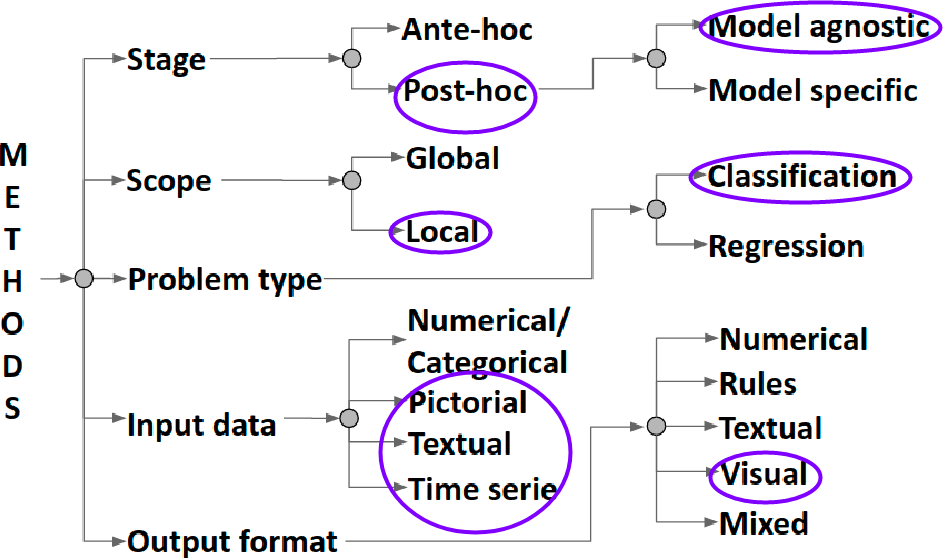

After studying the vast XAI landscape, we chose which parts of the XAI taxonomy to focus on: which methods, data modalities and problem types. Our choices, based on the largest usage in scientific literature, are shown graphically in the XAI taxonomy figure.

The key points of DIANNA:

- Provides an easy-to-use interface for non-(X)AI experts

- Implements well-known XAI methods (LIME, RISE and KernelSHAP) chosen by systematic and objective evaluation criteria

- Supports the de-facto standard format for neural network models - ONNX.

- Includes clear instructions for export/conversion from TensorFlow, PyTorch, Keras and scikit-learn to ONNX.

- Supports image, text and time series data modalities. Support for tabular data and even embeddings is planned.

- Comes with simple, intuitive image and text benchmarks

- Easily extendable to other XAI methods

For more information on DIANNA's unique strengths compared to other tools, please see the context landscape.

Installation

DIANNA can be installed from PyPI using pip on any of the supported Python versions:

python3 -m pip install dianna

To install the most recent development version directly from the GitHub repository, run:

python3 -m pip install git+https://github.com/dianna-ai/dianna.git

If you get an error related to OpenMP when importing dianna, have a look at this issue for possible workarounds.

Prerequisites (only for MacBook Pro users with an M1 Pro chip)

- To install TensorFlow you can follow this tutorial.

- To install TensorFlow Addons, you can follow these steps; for further reading, see this issue. Note that this temporary solution works only for macOS versions >= 12.0, and that this step may already have changed; see https://github.com/dianna-ai/dianna/issues/245.

- Before installing DIANNA, comment out the tensorflow requirement in the setup.cfg file (the TensorFlow package for M1 is called tensorflow-macos).

Getting started

You need:

- your trained ONNX model (see how to convert your PyTorch/TensorFlow/Keras/scikit-learn model to ONNX)

- 1 data item to be explained

You get:

- a relevance map overlaid on the data item

The general usage is explained in How to use DIANNA in the library's documentation.

Demo movie

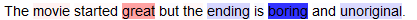

Text example:

import dianna

model_path = 'your_model.onnx' # model trained on text

text = 'The movie started great but the ending is boring and unoriginal.'

Which of your model's classes do you want an explanation for?

labels = [positive_class, negative_class]

Run using the XAI method of your choice, for example LIME:

explanation = dianna.explain_text(model_path, text, 'LIME')

dianna.visualization.highlight_text(explanation[labels.index(positive_class)], text)
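To give an intuition for what the LIME call above computes, here is a minimal NumPy sketch of the general LIME recipe for text: randomly drop words, score each perturbed sentence with the model, weight every sample by its cosine similarity to the original sentence, and fit a weighted linear model whose coefficients serve as word relevances. This is not DIANNA's implementation; `lime_text_sketch`, the `predict` scorer and the kernel width of 0.25 are hypothetical choices made for illustration.

```python
import numpy as np


def lime_text_sketch(predict, text, num_samples=500, kernel_width=0.25, seed=0):
    """Illustrative LIME-style word importances (not DIANNA's implementation).

    predict: hypothetical function mapping a string to one class's score.
    Returns a list of (word, weight) pairs in the order the words appear.
    """
    rng = np.random.default_rng(seed)
    words = text.split()
    n = len(words)
    # Each row is a binary mask over words: 1 keeps the word, 0 drops it.
    masks = rng.integers(0, 2, size=(num_samples, n))
    masks[0] = 1  # always include the unperturbed text
    scores = np.array(
        [predict(" ".join(w for w, keep in zip(words, m) if keep)) for m in masks],
        dtype=float,
    )
    # Cosine similarity between each mask and the all-ones mask (original text).
    kept = masks.sum(axis=1)
    cos_sim = np.divide(kept, np.sqrt(kept * n), out=np.zeros(num_samples), where=kept > 0)
    # Exponential kernel on cosine distance: closer samples get larger weights.
    weights = np.exp(-((1.0 - cos_sim) ** 2) / kernel_width**2)
    # Weighted least squares with an intercept: scale rows by sqrt(weight).
    design = np.hstack([np.ones((num_samples, 1)), masks])
    sw = np.sqrt(weights)
    coef, *_ = np.linalg.lstsq(design * sw[:, None], scores * sw, rcond=None)
    return list(zip(words, coef[1:]))
```

With a toy scorer such as `lambda s: float('great' in s.split()) - float('boring' in s.split())`, the weights for "great" and "boring" should come out close to +1 and -1, because that toy model is exactly linear in the word masks.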

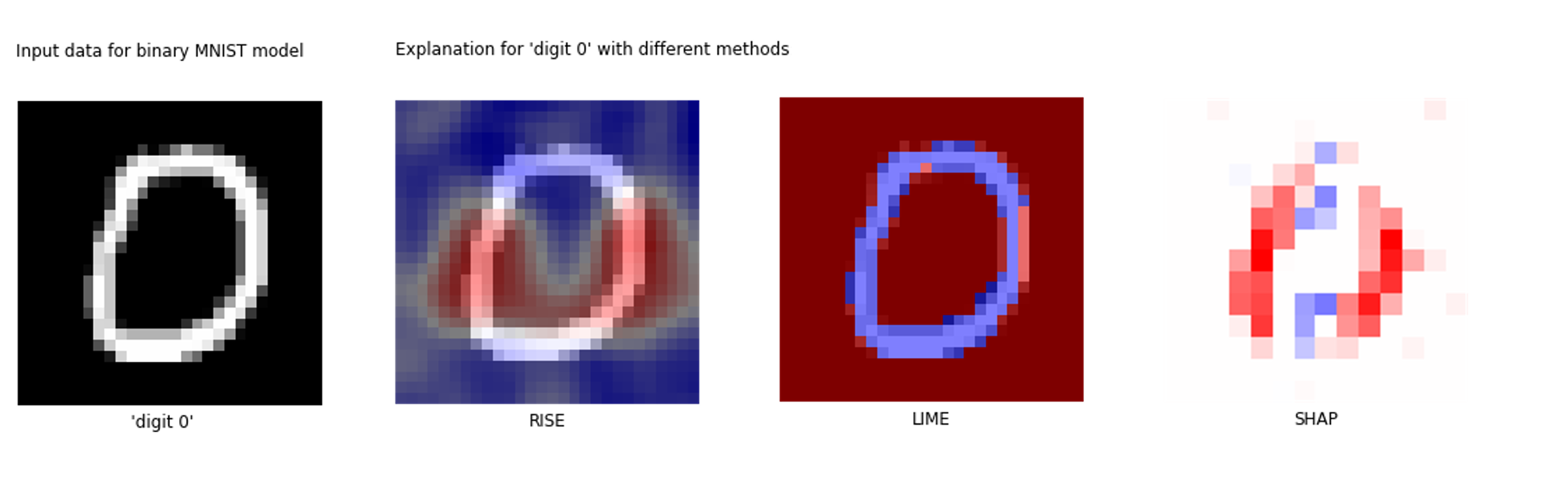

Image example:

import dianna
import PIL.Image

model_path = 'your_model.onnx' # model trained on images

image = PIL.Image.open('your_image.jpeg')

Tell us which axis of the image contains the channels, or colors:

axis_labels = {0: 'channels'}

Which of your model's classes do you want an explanation for?

labels = [class_a, class_b]

Run using the XAI method of your choice, for example RISE:

explanation = dianna.explain_image(model_path, image, 'RISE', axis_labels=axis_labels, labels=labels)

dianna.visualization.plot_image(explanation[labels.index(class_a)], original_data=image)
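RISE estimates relevance by probing the model with many randomly masked copies of the image and averaging the masks, each weighted by the model's score on the masked copy. The sketch below illustrates that idea for a single-channel image in plain NumPy; it is not DIANNA's code, and `rise_sketch`, the grid size and the keep probability are assumptions chosen for illustration.

```python
import numpy as np


def rise_sketch(predict, image, num_masks=1000, grid=4, p_keep=0.5, seed=0):
    """Illustrative RISE-style saliency map for a 2-D greyscale image.

    predict: hypothetical function mapping an (H, W) array to one class's score.
    Assumes H and W are divisible by `grid`. The original RISE method also
    randomly shifts the masks and upsamples them bilinearly; both are omitted.
    """
    rng = np.random.default_rng(seed)
    h, w = image.shape
    cell = np.ones((h // grid, w // grid))
    saliency = np.zeros((h, w))
    for _ in range(num_masks):
        # Random coarse on/off grid, upsampled to full resolution by repetition.
        coarse = (rng.random((grid, grid)) < p_keep).astype(float)
        mask = np.kron(coarse, cell)
        # Weight each mask by the model's score on the masked image.
        saliency += predict(image * mask) * mask
    # Normalise by the number of masks and the expected fraction of kept pixels.
    return saliency / (num_masks * p_keep)
```

For a toy model that only looks at the top-left quadrant, the resulting map should be brighter in that quadrant than elsewhere.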

Time-series example:

import dianna
import numpy as np
import pandas as pd

model_path = 'your_model.onnx' # model trained on time series

timeseries_instance = pd.read_csv('your_data_instance.csv').astype(float)

num_features = len(timeseries_instance) # The number of features to include in the explanation.

num_samples = 500 # The number of samples to generate for the LIME explainer.

Which of your model's classes do you want an explanation for?

class_names = [class_a, class_b] # String representation of the different classes of interest

labels = np.argsort(class_names) # Numerical representation of the different classes of interest for the model

Run using the XAI method of your choice, for example LIME with the following additional arguments:

explanation = dianna.explain_timeseries(model_path, timeseries_data=timeseries_instance, method='LIME',
                                        labels=labels, class_names=class_names, num_features=num_features,
                                        num_samples=num_samples, distance_method='cosine')
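The LIME arguments above control how perturbed samples are generated and weighted. As an illustration only, the sketch below assumes one common scheme: split the series into segments, replace a random subset of segments with the series mean, and weight each perturbed series with an exponential kernel on its cosine distance to the original. DIANNA's actual segmentation, masking value and kernel may differ; `perturb_timeseries`, `cosine_weights`, `num_segments` and `kernel_width` are hypothetical names.

```python
import numpy as np


def perturb_timeseries(series, num_samples=500, num_segments=10, seed=0):
    """Illustrative LIME-style perturbation of a 1-D time series.

    Splits the series into `num_segments` contiguous chunks and, per sample,
    replaces a random subset of chunks with the series mean (an assumption).
    """
    rng = np.random.default_rng(seed)
    segments = np.array_split(np.arange(len(series)), num_segments)
    masks = rng.integers(0, 2, size=(num_samples, num_segments))
    perturbed = np.tile(series.astype(float), (num_samples, 1))
    for row, mask in zip(perturbed, masks):  # each row is a view into perturbed
        for idx, keep in zip(segments, mask):
            if not keep:
                row[idx] = series.mean()
    return masks, perturbed


def cosine_weights(original, perturbed, kernel_width=0.25):
    """Exponential kernel on the cosine distance to the original series."""
    sims = perturbed @ original / (
        np.linalg.norm(perturbed, axis=1) * np.linalg.norm(original)
    )
    distances = 1.0 - sims
    return np.exp(-(distances**2) / kernel_width**2)
```

The model would then be evaluated on each perturbed series, and a weighted linear model fitted on `masks` would give one relevance value per segment.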

For visualization of the heatmap, please refer to the tutorial.

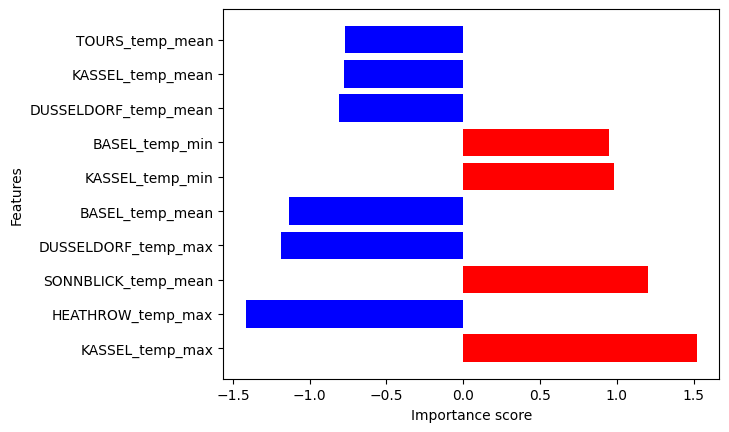

Tabular example:

import dianna
import pandas as pd

model_path = 'your_model.onnx' # model trained on tabular data

tabular_instance = pd.read_csv('your_data_instance.csv')

Run using the XAI method of your choice. Note that you need to specify the mode, either regression or classification. Here, for instance, is a regression task using KernelSHAP with the following additional arguments:

explanation = dianna.explain_tabular(model_path, input_tabular=tabular_instance, method='kernelshap',
                                     mode='regression', training_data=X_train,
                                     training_data_kmeans=5, feature_names=input_features.columns)

dianna.visualization.plot_tabular(explanation, X_test.columns, num_features=10) # display 10 most salient features
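KernelSHAP approximates Shapley values, which attribute a prediction to each feature by averaging that feature's marginal contribution over all coalitions of the other features. For a handful of features they can be computed exactly, as in the sketch below. It uses a single baseline point for absent features, whereas KernelSHAP averages over background data (which `training_data_kmeans=5` presumably summarises into 5 k-means centroids). `exact_shapley` and its arguments are hypothetical, not part of DIANNA.

```python
from itertools import combinations
from math import factorial


def exact_shapley(predict, instance, baseline):
    """Exact Shapley values by enumerating all feature coalitions (illustrative).

    Features outside a coalition take their `baseline` value. Enumeration costs
    O(2^n) model calls, which is why KernelSHAP samples coalitions instead.
    """
    n = len(instance)

    def value(coalition):
        # Model output with coalition features from `instance`, others from `baseline`.
        x = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(x)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi
```

For a linear model, each value reduces to the feature's weight times its deviation from the baseline, and the values always sum to `predict(instance) - predict(baseline)`.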

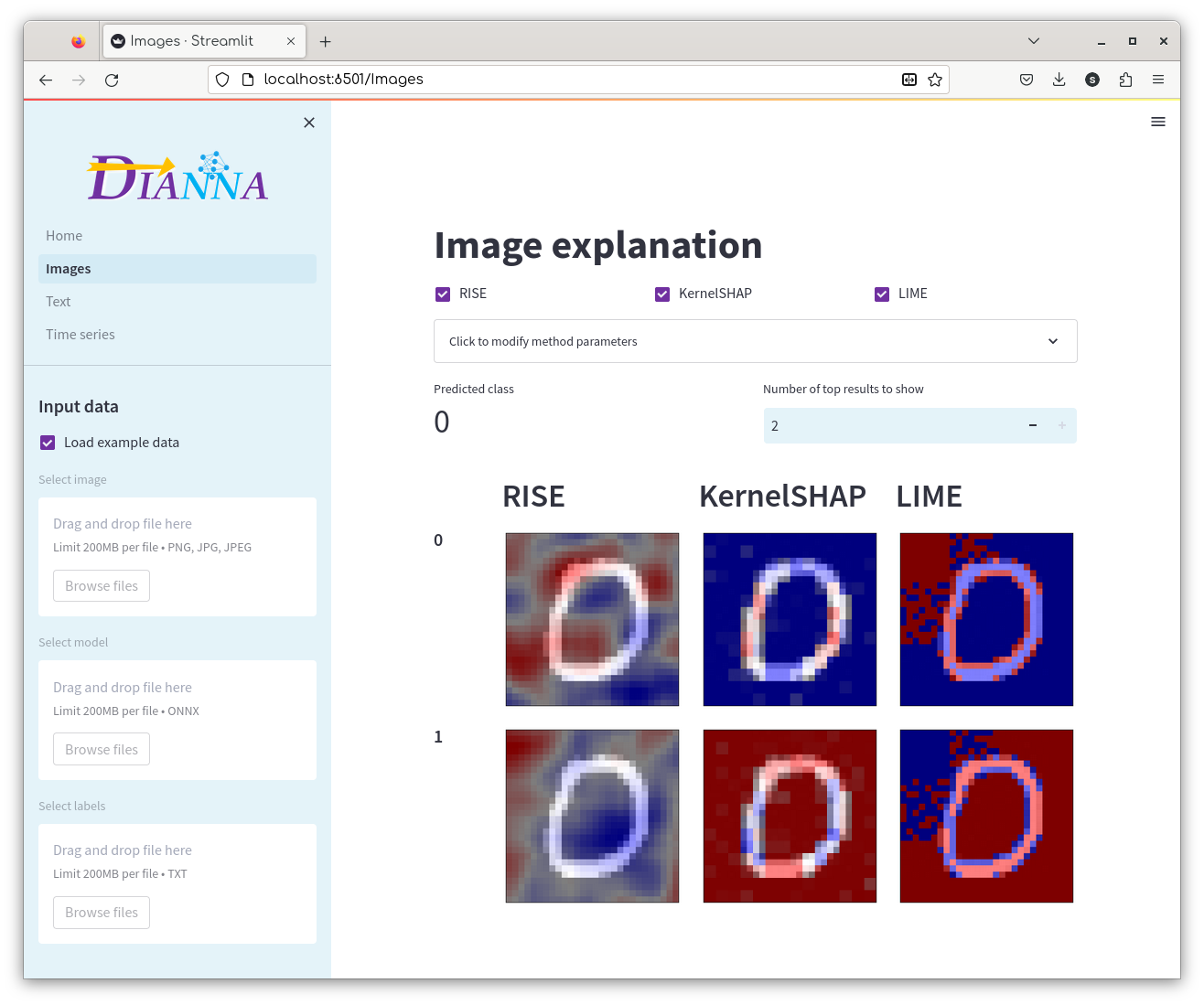

Dashboard

Explore the explanations of your trained model using the DIANNA dashboard. See the dashboard documentation for more information.

Datasets

DIANNA comes with simple datasets. Their main goal is to provide intuitive insight into the working of the XAI methods. They can be used as benchmarks for evaluation and comparison of existing and new XAI methods.

Images

| Dataset | Description | Examples | Generation |

|---|---|---|---|

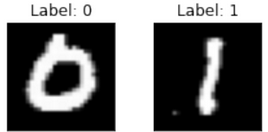

| Binary MNIST |

Greyscale images of the digits "1" and "0" - a 2-class subset from the famousMNIST dataset for handwritten digit classification. |  |

Binary MNIST dataset generation |

| Simple Geometric (circles and triangles) |

Images of circles and triangles for 2-class geometric shape classificaiton. The shapes of varying size and orientation and the background have varying uniform gray levels. |  |

Simple geometric shapes dataset generation |

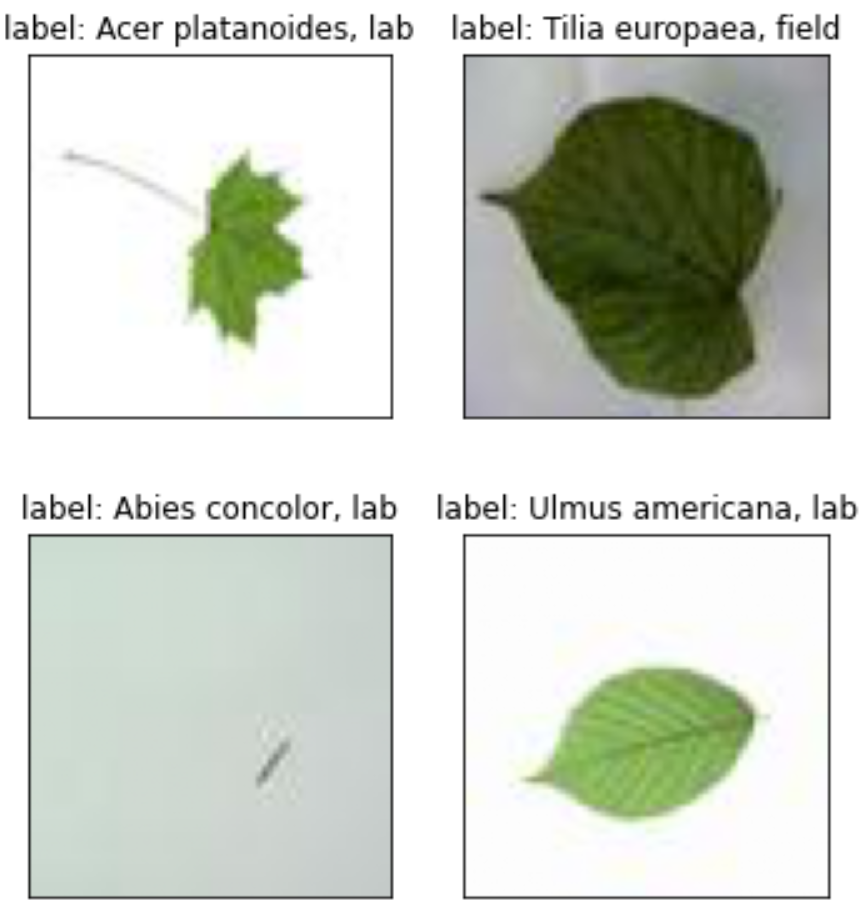

| Simple Scientific (LeafSnap30) |

Color images of tree leaves - a 30-class post-processed subset from the LeafSnap dataset for automatic identification of North American tree species. |  |

LeafSnap30 dataset generation |

Text

| Dataset | Description | Examples | Generation |
|---|---|---|---|
| Stanford sentiment treebank | Dataset for predicting the sentiment, positive or negative, of movie reviews. | This movie was actually neither that funny, nor super witty. | Sentiment treebank |

Time series

| Dataset | Description | Examples | Generation |
|---|---|---|---|
| Coffee dataset | Food spectrograph time series dataset for a two-class problem: distinguishing between Robusta and Arabica coffee beans. | | data source |
| Weather dataset | The light version of the weather prediction dataset, which contains daily observations (89 features) for 11 European locations through the years 2000 to 2010. | | data source |

Tabular

| Dataset | Description | Examples | Generation |
|---|---|---|---|
| Penguin dataset | The Palmer Archipelago (Antarctica) penguin dataset is a great intro dataset for data exploration and visualization, similar to the famous Iris dataset. | | data source |
| Weather dataset | The light version of the weather prediction dataset, which contains daily observations (89 features) for 11 European locations through the years 2000 to 2010. | | data source |

ONNX models

We work with ONNX! ONNX is a great unified neural network standard which can be used to boost reproducible science. Using ONNX for your model can also improve its performance. In case your models are still in another popular DNN (deep neural network) format, here are some simple recipes to convert them:

- PyTorch and PyTorch Lightning - use the built-in torch.onnx.export function to convert PyTorch models to ONNX, or call the built-in to_onnx method on your LightningModule to export PyTorch Lightning models to ONNX.

- TensorFlow - use the tf2onnx package to convert TensorFlow models to ONNX.

- Keras - as for TensorFlow, the tf2onnx package also supports Keras models.

- scikit-learn - use the skl2onnx package to convert scikit-learn models to ONNX.

More converters with examples and tutorials can be found on the ONNX tutorial page.

And here are links to notebooks showing how we created our models on the benchmark datasets:

Images

| Models | Generation |
|---|---|
| Binary MNIST model | Binary MNIST model generation |
| Simple Geometric model | Simple geometric shapes model generation |
| Simple Scientific model | LeafSnap30 model generation |

Text

| Models | Generation |
|---|---|
| Movie reviews model | Stanford sentiment treebank model generation |

Time series

| Models | Generation |
|---|---|
| Coffee model | Coffee model generation |
| Season prediction model | Season prediction model generation |

Tabular

| Models | Generation |

|---|---|

| Penguin model (classification) | Penguin model generation |

| Sunshine hours prediction model (regression) | Sunshine hours prediction model generation |

We envision the birth of the ONNX Scientific models zoo soon...

Tutorials

DIANNA supports different data modalities and XAI methods. The table contains links to the papers of the relevant XAI methods (for some explanatory videos on the methods, please see the tutorials). The DIANNA tutorials cover each supported method and data modality on at least one dataset. Our future plans to expand DIANNA with more data modalities and XAI methods are given in the ROADMAP.

| Data \ XAI | RISE | LIME | KernelSHAP |
|---|---|---|---|
| Images | ✅ | ✅ | ✅ |
| Text | ✅ | ✅ | |
| Timeseries | ✅ | ✅ | |
| Tabular | planned | ✅ | planned |
| Embedding | planned | planned | planned |
| Graphs* | work in progress | work in progress | work in progress |

LRP and PatternAttribution also feature in the top 5 of our XAI methods, thoroughly evaluated using objective criteria (details in a forthcoming blog post). Contributions adding these and other (new) post-hoc explainability methods for ONNX models are very welcome!

Reference documentation

For detailed information on using specific DIANNA functions, please visit the documentation page hosted at Readthedocs.

Contributing

If you want to contribute to the development of DIANNA, have a look at the contribution guidelines. See our developer documentation for information on developer installation, running tests, generating documentation, versioning and making a release.

How to cite us

If you use this package for your scientific work, please consider citing the software directly as:

Ranguelova, E., Bos, P., Liu, Y., Meijer, C., Oostrum, L., Crocioni, G., Ootes, L., Chandramouli, P., Jansen, A., Smeets, S. (2023). dianna (*[VERSION YOU USED]*). Zenodo. https://zenodo.org/record/5592606

or the JOSS paper as:

Ranguelova et al., (2022). DIANNA: Deep Insight And Neural Network Analysis. Journal of Open Source Software, 7(80), 4493, https://doi.org/10.21105/joss.04493

See also the Zenodo page or the JOSS page for exporting the software citation to BibTeX and other formats.

Credits

This package was created with Cookiecutter and the NLeSC/python-template.


Download files


Source Distribution

Built Distribution

File details

Details for the file dianna-1.3.0.tar.gz.

File metadata

- Download URL: dianna-1.3.0.tar.gz

- Upload date:

- Size: 23.9 MB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.9.18

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | eba3270b0c155fbda527e85645f8b81e33e8ce921bff3798aad58d68343fad07 |
| MD5 | eb662841717372fb432f0217801c0d94 |
| BLAKE2b-256 | cd04cab28e4bb41bd1a87a4fe190ee0de5b2b52b8ac3d811e434e71dbf53662b |

File details

Details for the file dianna-1.3.0-py3-none-any.whl.

File metadata

- Download URL: dianna-1.3.0-py3-none-any.whl

- Upload date:

- Size: 24.0 MB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.9.18

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 6892f49b60ef5c54639b0f3de41b42f69ea8f04c3ee69062ceb72d06d11cf6d3 |
| MD5 | 56d68e5eda5348d2dcdbd8beb7874058 |
| BLAKE2b-256 | 169d168f75bdb28d1c12b66c53694fd16b2413b49ee05dfe89c7f94f57758475 |