Fairness Indicators


Fairness Indicators is designed to help teams evaluate, improve, and compare models for fairness concerns, working in partnership with the broader TensorFlow toolkit.

The tool is actively used internally by many of our products and is now available in beta for you to try on your own use cases. We would love to partner with you to understand where Fairness Indicators is most useful and where added functionality would be valuable. Please reach out at tfx@tensorflow.org. You can share feedback on your experience, as well as feature requests, here.

Key links

- Introductory Video

- Fairness Indicators Case Study

- Fairness Indicators Example Colab

- Fairness Indicators: Thinking about Fairness Evaluation

What is Fairness Indicators?

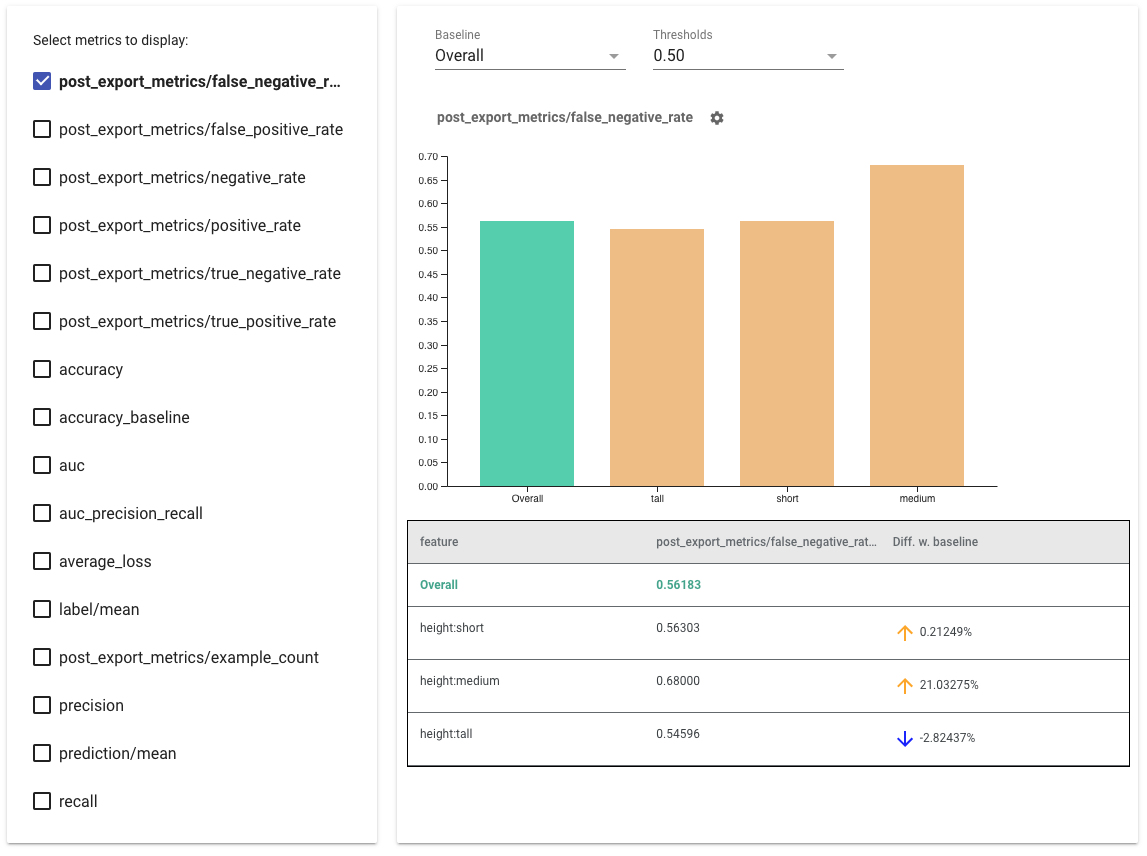

Fairness Indicators enables easy computation of commonly identified fairness metrics for binary and multiclass classifiers.
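Many of these metrics are confusion-matrix rates, such as false positive rate and false negative rate, computed separately for each group. The plain-Python sketch below shows what that per-group computation means for a binary classifier at a single decision threshold. It is not the Fairness Indicators API: the function name `rates_by_group` and the `(group, label, score)` input format are hypothetical, chosen only for illustration.

```python
from collections import defaultdict


def rates_by_group(examples, threshold=0.5):
    """Compute false positive and false negative rates for each group.

    `examples` is an iterable of (group, label, score) tuples: `label` is 0 or 1,
    and `score` is the model's predicted probability of the positive class.
    """
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for group, label, score in examples:
        predicted = 1 if score >= threshold else 0
        c = counts[group]
        if label == 1 and predicted == 1:
            c["tp"] += 1
        elif label == 0 and predicted == 1:
            c["fp"] += 1
        elif label == 0:
            c["tn"] += 1
        else:  # label == 1, predicted == 0
            c["fn"] += 1

    rates = {}
    for group, c in counts.items():
        negatives = c["fp"] + c["tn"]
        positives = c["tp"] + c["fn"]
        rates[group] = {
            # None when the group has no examples with that label.
            "false_positive_rate": c["fp"] / negatives if negatives else None,
            "false_negative_rate": c["fn"] / positives if positives else None,
        }
    return rates


# Toy data for two hypothetical groups, "a" and "b".
examples = [
    ("a", 0, 0.7), ("a", 0, 0.2), ("a", 1, 0.9), ("a", 1, 0.4),
    ("b", 0, 0.1), ("b", 0, 0.3), ("b", 1, 0.8), ("b", 1, 0.6),
]
print(rates_by_group(examples))
```

On this toy data, group "a" has a false positive rate and false negative rate of 0.5 each, while group "b" has 0.0 for both: the kind of gap between groups that fairness metrics are meant to surface.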

Many existing tools for evaluating fairness concerns don’t work well on large-scale datasets and models. At Google, it is important for us to have tools that can work on billion-user systems. Fairness Indicators lets you evaluate fairness metrics for use cases of any size.

In particular, Fairness Indicators includes the ability to:

- Evaluate the distribution of datasets

- Evaluate model performance, sliced across defined groups of users

- Feel confident about your results with confidence intervals and evaluations at multiple thresholds

- Dive deep into individual slices to explore root causes and opportunities for improvement
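To make "evaluations at multiple thresholds" and "confidence intervals" concrete, the sketch below computes one slice's false positive rate at several thresholds and attaches a confidence interval to each. It uses a simple percentile bootstrap chosen for illustration; TFMA's own confidence-interval computation may use a different resampling scheme. The helper names and the `(label, score)` pair format are hypothetical.

```python
import random


def false_positive_rate(pairs, threshold):
    """FPR over (label, score) pairs; None if there are no negative labels."""
    negatives = [score for label, score in pairs if label == 0]
    if not negatives:
        return None
    return sum(score >= threshold for score in negatives) / len(negatives)


def bootstrap_interval(pairs, threshold, num_samples=1000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for the FPR of one slice."""
    rng = random.Random(seed)  # fixed seed so results are reproducible
    estimates = []
    for _ in range(num_samples):
        # Resample the slice with replacement, keeping its original size.
        resample = [rng.choice(pairs) for _ in pairs]
        value = false_positive_rate(resample, threshold)
        if value is not None:
            estimates.append(value)
    if not estimates:
        return None
    estimates.sort()
    lower = estimates[int((alpha / 2) * (len(estimates) - 1))]
    upper = estimates[int((1 - alpha / 2) * (len(estimates) - 1))]
    return lower, upper


# Toy (label, score) pairs for a single hypothetical slice.
slice_pairs = [(0, 0.1), (0, 0.4), (0, 0.6), (0, 0.8), (1, 0.7), (1, 0.3)]
for threshold in (0.25, 0.5, 0.75):
    fpr = false_positive_rate(slice_pairs, threshold)
    low, high = bootstrap_interval(slice_pairs, threshold)
    print(f"threshold={threshold}: FPR={fpr:.2f} (95% interval {low:.2f} to {high:.2f})")
```

With only six examples the intervals are wide, which is the point: a small slice can show a large metric gap that is not well supported by the data.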

This case study, complete with videos and programming exercises, demonstrates how Fairness Indicators can be used on one of your own products to evaluate fairness concerns over time.

The pip package download includes:

- TensorFlow Data Validation (TFDV) [analyze the distribution of your dataset]

- TensorFlow Model Analysis (TFMA) [analyze model performance]

- Fairness Indicators [an addition to TFMA that adds fairness metrics and the ability to easily compare performance across slices]

- The What-If Tool (WIT) [an interactive visual interface for probing your models]
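Before looking at model metrics, it helps to check how examples are distributed across the groups you plan to slice by, the kind of question TFDV answers at scale. The plain-Python sketch below is not TFDV's API; the helper `slice_summary` and the list-of-dicts record format are hypothetical. It reports, for each value of one feature, how many examples there are, what share of the dataset they make up, and the positive-label rate within that slice.

```python
from collections import Counter


def slice_summary(records, slice_key, label_key="label"):
    """Summarize how examples and positive labels are spread across one feature.

    `records` is a list of dicts; records missing `slice_key` are grouped
    under "<missing>".
    """
    totals = Counter()
    positives = Counter()
    for record in records:
        value = record.get(slice_key, "<missing>")
        totals[value] += 1
        positives[value] += int(record[label_key] == 1)

    n = sum(totals.values())
    return {
        value: {
            "count": totals[value],
            "share": totals[value] / n,  # fraction of the whole dataset
            "positive_rate": positives[value] / totals[value],  # base rate in the slice
        }
        for value in totals
    }


records = [
    {"group": "a", "label": 1},
    {"group": "a", "label": 0},
    {"group": "a", "label": 0},
    {"group": "b", "label": 1},
]
print(slice_summary(records, "group"))
```

A slice that is tiny or has a very different base rate is worth noting before comparing model metrics across slices.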

How can I use Fairness Indicators?

TensorFlow Models

- Access Fairness Indicators as part of the Evaluator component in TensorFlow Extended [docs]

- Access Fairness Indicators in TensorBoard alongside other real-time metrics [docs]

Not using existing TensorFlow tools? No worries!

- Download the Fairness Indicators pip package, and use TensorFlow Model Analysis as a standalone tool [docs]

Non-TensorFlow Models

- Model Agnostic TFMA enables you to compute Fairness Indicators based on the output of any model [docs]
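Because Model Agnostic TFMA works from a model's outputs rather than from the model itself, the core computation only needs the scores plus the feature you slice on. The plain-Python sketch below illustrates that idea with precomputed scores from any model, reporting each slice's positive prediction rate and its difference from the overall rate. It is not the Model Agnostic TFMA API; the function `compare_to_overall` and the `(slice_value, score)` input format are hypothetical.

```python
def compare_to_overall(predictions, threshold=0.5):
    """Compare each slice's positive prediction rate with the overall rate.

    `predictions` is a non-empty list of (slice_value, score) pairs produced
    by any model; a score at or above `threshold` counts as a positive prediction.
    """
    def positive_rate(scores):
        return sum(score >= threshold for score in scores) / len(scores)

    overall = positive_rate([score for _, score in predictions])

    by_slice = {}
    for slice_value, score in predictions:
        by_slice.setdefault(slice_value, []).append(score)

    return {
        value: {
            "positive_rate": positive_rate(scores),
            "diff_vs_overall": positive_rate(scores) - overall,
        }
        for value, scores in by_slice.items()
    }


# Scores exported from some model, tagged with a hypothetical slice feature.
predictions = [
    ("a", 0.9), ("a", 0.8), ("a", 0.1), ("a", 0.2),
    ("b", 0.3), ("b", 0.6), ("b", 0.2), ("b", 0.1),
]
print(compare_to_overall(predictions))
```

Here the overall positive rate is 3/8, slice "a" sits 0.125 above it, and slice "b" sits 0.125 below it.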

Examples

The examples directory contains several examples.

- Fairness_Indicators_Example_Colab.ipynb gives an overview of Fairness Indicators in TensorFlow Model Analysis and how to use it with a real dataset. This notebook also covers TensorFlow Data Validation and the What-If Tool, two tools packaged with Fairness Indicators for analyzing datasets and TensorFlow models.

- Fairness_Indicators_on_TF_Hub.ipynb demonstrates how to use Fairness Indicators to compare models trained on different text embeddings. This notebook uses text embeddings from TensorFlow Hub, TensorFlow's library to publish, discover, and reuse model components.

- Fairness_Indicators_TensorBoard_Plugin_Example_Colab.ipynb demonstrates how to visualize Fairness Indicators in TensorBoard.

More questions?

For more information on how to think about fairness evaluation in the context of your use case, see this link.

If you have found a bug in Fairness Indicators, please file a GitHub issue with as much supporting information as you can provide.

Compatible versions

The following table shows the package versions that are compatible with each other. This is determined by our testing framework, but other untested combinations may also work.

| fairness-indicators | tensorflow | tensorflow-data-validation | tensorflow-model-analysis |
|---|---|---|---|
| GitHub master | nightly (1.x/2.x) | 0.23.0 | 0.23.0 |
| v0.23.0 | 1.15.2 / 2.3 | 0.23.0 | 0.23.0 |
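To see whether an environment matches the v0.23.0 row of this table, you can read installed versions with the standard library's `importlib.metadata` (Python 3.8+). The sketch below is a hypothetical helper, not part of any of these packages. It compares only the major.minor version (an assumption made so that patch releases such as 0.23.1 are not flagged) and leaves out the tensorflow column, which lists more than one acceptable version.

```python
from importlib import metadata

# Hypothetical: single-version entries from the v0.23.0 row, as major.minor.
# tensorflow ("1.15.2 / 2.3") is left out because it allows several versions.
EXPECTED_V0_23 = {
    "fairness-indicators": "0.23",
    "tensorflow-data-validation": "0.23",
    "tensorflow-model-analysis": "0.23",
}


def check_versions(expected):
    """Return {package: (installed, expected)} for every mismatch.

    `installed` is None when the package is not installed. Only the first two
    version components are compared.
    """
    mismatches = {}
    for package, wanted in expected.items():
        try:
            installed = metadata.version(package)
        except metadata.PackageNotFoundError:
            installed = None
        if installed is None or installed.split(".")[:2] != wanted.split(".")[:2]:
            mismatches[package] = (installed, wanted)
    return mismatches


if __name__ == "__main__":
    for package, (installed, wanted) in check_versions(EXPECTED_V0_23).items():
        print(f"{package}: installed {installed}, expected {wanted}.x")
```

An empty result means every listed package is installed at a matching major.minor version; as the table notes, untested combinations may also work.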


File details

Details for the file fairness_indicators-0.23.1-py3-none-any.whl.

File metadata

- Download URL: fairness_indicators-0.23.1-py3-none-any.whl

- Upload date:

- Size: 30.9 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.2.0 pkginfo/1.5.0.1 requests/2.24.0 setuptools/49.6.0 requests-toolbelt/0.9.1 tqdm/4.48.2 CPython/3.7.6

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 2417fc2c1aac8b5353edf5248affa02f7b004bdbd8560a5d500ab0ce6d1b6971 |
| MD5 | c3773e8b37725142661b0ccf350c9e12 |
| BLAKE2b-256 | 8f8df5535c25672d979df626cc7578598e1d3dffe5f3255f50cec252cce5252d |
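A downloaded wheel can be checked against the SHA256 digest listed for fairness_indicators-0.23.1-py3-none-any.whl using Python's standard `hashlib`. The helpers `sha256_of` and `verify` below are hypothetical names for a minimal sketch; the file is read in chunks so it never needs to fit in memory at once.

```python
import hashlib
from pathlib import Path

# SHA256 digest listed for fairness_indicators-0.23.1-py3-none-any.whl.
EXPECTED_SHA256 = "2417fc2c1aac8b5353edf5248affa02f7b004bdbd8560a5d500ab0ce6d1b6971"


def sha256_of(path, chunk_size=1 << 16):
    """Return the hex SHA256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with Path(path).open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(path, expected=EXPECTED_SHA256):
    """True if the file's SHA256 digest matches `expected` (case-insensitive)."""
    return sha256_of(path) == expected.lower()
```

For example, `verify("fairness_indicators-0.23.1-py3-none-any.whl")` returns True only if the wheel in the current directory matches the published digest.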