Distributed Dataframes for Multimodal Data

Project description

Website • Docs • Installation • 10-minute tour of Daft • Community and Support

Daft: Unified Engine for Data Analytics, Engineering & ML/AI

Daft is a distributed query engine for large-scale data processing using Python or SQL, implemented in Rust.

Familiar interactive API: Lazy Python Dataframe for rapid and interactive iteration, or SQL for analytical queries

Focus on the what: Powerful Query Optimizer that rewrites queries to be as efficient as possible

Data Catalog integrations: Full integration with data catalogs such as Apache Iceberg

Rich multimodal type-system: Supports multimodal types such as Images, URLs, Tensors and more

Seamless Interchange: Built on the Apache Arrow In-Memory Format

Built for the cloud: Record-setting I/O performance for integrations with S3 cloud storage


About Daft

Daft was designed with the following principles in mind:

Any Data: Beyond the usual strings/numbers/dates, Daft columns can also efficiently hold complex or nested multimodal data such as Images, Embeddings and Python objects, thanks to its Arrow-based memory representation. Ingestion and basic transformations of multimodal data are extremely easy and performant in Daft.

Interactive Computing: Daft is built for the interactive developer experience through notebooks or REPLs; intelligent caching and query optimizations accelerate your experimentation and data exploration.

Distributed Computing: Some workloads can quickly outgrow your local laptop’s computational resources - Daft integrates natively with Ray for running dataframes on large clusters of machines with thousands of CPUs/GPUs.

Getting Started

Installation

Install Daft with pip install getdaft.

For more advanced installations (e.g. installing from source or with extra dependencies such as Ray and AWS utilities), please see our Installation Guide.

Quickstart

Check out our 10-minute quickstart!

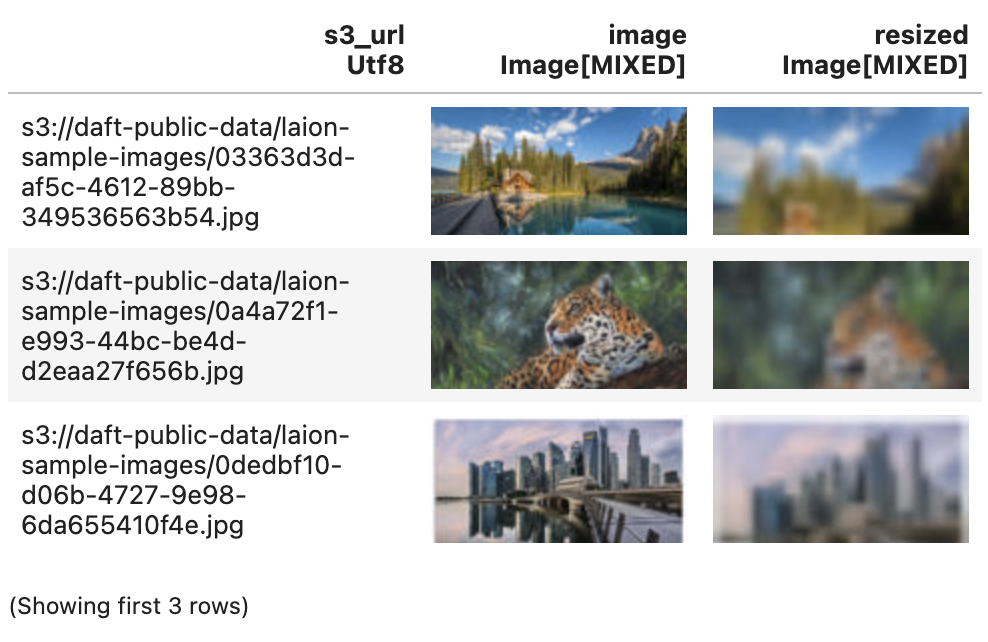

In this example, we load images from an AWS S3 bucket’s URLs and resize each image in the dataframe:

```python
import daft

# Load a dataframe from filepaths in an S3 bucket
df = daft.from_glob_path("s3://daft-public-data/laion-sample-images/*")

# 1. Download column of image URLs as a column of bytes
# 2. Decode the column of bytes into a column of images
df = df.with_column("image", df["path"].url.download().image.decode())

# Resize each image into 32x32
df = df.with_column("resized", df["image"].image.resize(32, 32))

df.show(3)
```

Benchmarks

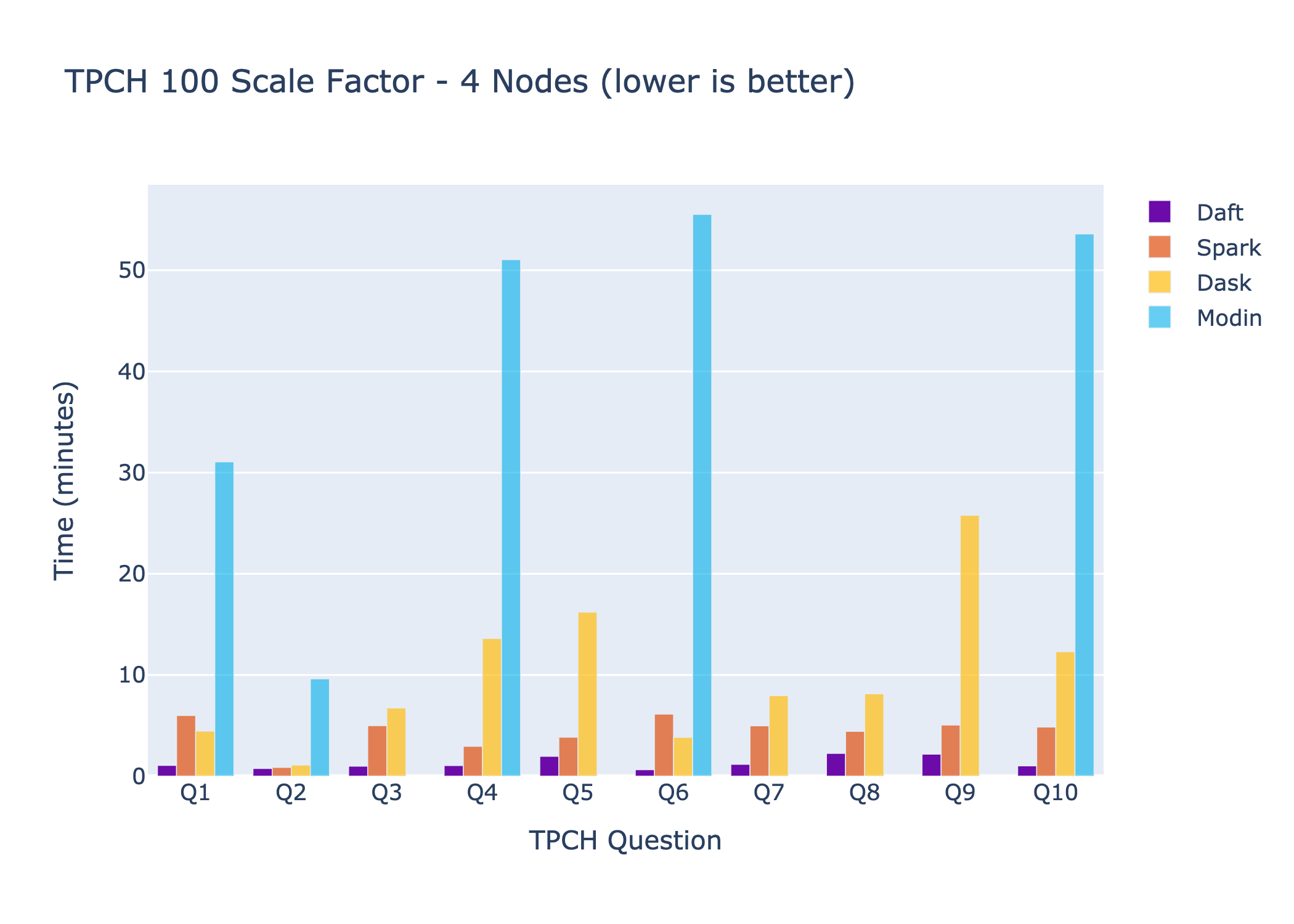

To see the full benchmarks, detailed setup, and logs, check out our benchmarking page.

More Resources

10-minute tour of Daft - learn more about Daft’s full range of capabilities, including data loading from URLs, joins, user-defined functions (UDFs), groupby, aggregations and more.

User Guide - take a deep-dive into each topic within Daft

API Reference - API reference for public classes/functions of Daft

Contributing

To start contributing to Daft, please read CONTRIBUTING.md.

Here’s a list of good first issues to get yourself warmed up with Daft. Comment in the issue to pick it up, and feel free to ask any questions!

Telemetry

To help improve Daft, we collect non-identifiable data.

To disable this behavior, set the following environment variable: DAFT_ANALYTICS_ENABLED=0
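This minimal sketch disables telemetry for a child process that runs a Daft job, using only the standard library. `env_without_daft_analytics`, `run_pipeline` and the script path are hypothetical names. The session ID is created when Daft is imported, so the variable should already be set before `import daft` runs. Launching a fresh process with the variable already in its environment guarantees that ordering:

```python
import os
import subprocess
import sys


def env_without_daft_analytics(base=None):
    """Copy an environment mapping and set DAFT_ANALYTICS_ENABLED=0 in the copy."""
    env = dict(os.environ if base is None else base)
    env["DAFT_ANALYTICS_ENABLED"] = "0"
    return env


def run_pipeline(script_path):
    """Run a (hypothetical) Daft script in a fresh interpreter with telemetry off.

    The variable is present before the child process executes `import daft`.
    """
    return subprocess.run(
        [sys.executable, script_path],
        env=env_without_daft_analytics(),
        check=True,
    )
```

For example, call `run_pipeline("my_pipeline.py")`. Within a single script, assigning `os.environ["DAFT_ANALYTICS_ENABLED"] = "0"` before the first `import daft` should have the same effect. When you control the environment, exporting the variable in your shell is the simplest option.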

The data that we collect is:

Non-identifiable: events are keyed by a session ID which is generated on import of Daft

Metadata-only: we do not collect any of our users’ proprietary code or data

For development only: we do not buy or sell any user data

Please see our documentation for more details.

License

Daft has an Apache 2.0 license - please see the LICENSE file.

Project details


Download files

Download the file for your platform. If you're not sure which to choose, learn more about installing packages.
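Each built distribution's filename encodes where it can be installed. Under the wheel filename convention (PEP 427, with tags from PEP 425), a name like `getdaft-0.3.8-cp38-abi3-win_amd64.whl` reads as `{name}-{version}-{python tag}-{abi tag}-{platform tag}`. The `cp38-abi3` pair marks a wheel built against CPython 3.8's stable ABI, which is why these files are listed as usable on CPython 3.8+. A tag field can also hold several tags joined by `.`, as in the manylinux wheels. The standard-library sketch below splits such a filename into its parts. It is illustrative only and skips the name and version normalization that a real installer performs:

```python
def parse_wheel_filename(filename):
    """Split a wheel filename into its PEP 427 components.

    Format: {name}-{version}(-{build})?-{python}-{abi}-{platform}.whl
    Each tag field may hold several tags joined by "." (a compressed tag set).
    """
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel: {filename}")
    parts = filename[: -len(".whl")].split("-")
    if len(parts) == 5:
        name, version, python, abi, platform = parts
        build = None
    elif len(parts) == 6:
        name, version, build, python, abi, platform = parts
    else:
        raise ValueError(f"unexpected wheel filename: {filename}")
    return {
        "name": name,
        "version": version,
        "build": build,
        "python": python.split("."),
        "abi": abi.split("."),
        "platform": platform.split("."),
    }


info = parse_wheel_filename(
    "getdaft-0.3.8-cp38-abi3-manylinux_2_17_x86_64.manylinux2014_x86_64.whl"
)
print(info["platform"])
```

In practice, pip picks the matching wheel automatically. If you need this parsing in your own tooling, the `packaging` library's `packaging.utils.parse_wheel_filename` is a stricter, maintained implementation.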

Source Distribution

Built Distributions

File details

Details for the file getdaft-0.3.8.tar.gz.

File metadata

- Download URL: getdaft-0.3.8.tar.gz

- Upload date:

- Size: 3.7 MB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/5.1.1 CPython/3.11.10

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 6a43235549125f856b388707acb1042781d8078a379b974fcf843e47ecaf713c |
| MD5 | 59a6dfd2b8ad34bbf70b86743e5d6653 |
| BLAKE2b-256 | a4f0fc8dc3ff6934d7001134cac01d27bacb541617025273f14bbfb7805621db |

File details

Details for the file getdaft-0.3.8-cp38-abi3-win_amd64.whl.

File metadata

- Download URL: getdaft-0.3.8-cp38-abi3-win_amd64.whl

- Upload date:

- Size: 26.6 MB

- Tags: CPython 3.8+, Windows x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/5.1.1 CPython/3.11.10

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 127d8434c2d951d7ade329847c67acb4f9c635de3115e65dea36000ab9dc704e |
| MD5 | 3c1df1aec27b1363cc20c06ef270d4b0 |
| BLAKE2b-256 | d41b144b37641269b43efd057d5024303dac87776bc5691c5012ef7615741ccd |

File details

Details for the file getdaft-0.3.8-cp38-abi3-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.

File metadata

- Download URL: getdaft-0.3.8-cp38-abi3-manylinux_2_17_x86_64.manylinux2014_x86_64.whl

- Upload date:

- Size: 29.6 MB

- Tags: CPython 3.8+, manylinux: glibc 2.17+ x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/5.1.1 CPython/3.11.10

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 9aa863c41412ecbf0e657cc05e2d94602c0b33c8cd2a234043312bea7e0578e0 |
| MD5 | eebf5ecd372c70f0fc709094c8fa5033 |
| BLAKE2b-256 | 58de35a5a5024c0f0ec9021bda959d91e69089dad860217a3b70738bbdfeb230 |

File details

Details for the file getdaft-0.3.8-cp38-abi3-manylinux_2_17_aarch64.manylinux2014_aarch64.whl.

File metadata

- Download URL: getdaft-0.3.8-cp38-abi3-manylinux_2_17_aarch64.manylinux2014_aarch64.whl

- Upload date:

- Size: 28.1 MB

- Tags: CPython 3.8+, manylinux: glibc 2.17+ ARM64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/5.1.1 CPython/3.11.10

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | bf36435df2a42eac7fb9415558112109800d96c67eedc64a44a2634d48a02e91 |
| MD5 | 0f214fe6e6e902dd0f7792de3c743fcd |
| BLAKE2b-256 | 2c68a74e7dd5bd2e9d60106e8fe2b9ceb9cb4511a778b50ada505f2cc84b7e7f |

File details

Details for the file getdaft-0.3.8-cp38-abi3-macosx_11_0_arm64.whl.

File metadata

- Download URL: getdaft-0.3.8-cp38-abi3-macosx_11_0_arm64.whl

- Upload date:

- Size: 24.5 MB

- Tags: CPython 3.8+, macOS 11.0+ ARM64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/5.1.1 CPython/3.11.10

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | e2bbf1b604c2d5f004f5a734acca3d3a7e7d36b29b0a447be4d99bd99f10361e |
| MD5 | 160f755354eaafc32c72d70e22adb625 |
| BLAKE2b-256 | 61b33474cac7346c6915d3cde49bb7374717a64e12acdde0b01bda75380af328 |

File details

Details for the file getdaft-0.3.8-cp38-abi3-macosx_10_12_x86_64.whl.

File metadata

- Download URL: getdaft-0.3.8-cp38-abi3-macosx_10_12_x86_64.whl

- Upload date:

- Size: 26.6 MB

- Tags: CPython 3.8+, macOS 10.12+ x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/5.1.1 CPython/3.11.10

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | ae40cbbfdc6b33f5dd80c9b9890890bcd837ccfeb178098abff6270c824aaf98 |
| MD5 | a7f809230ee43e8b49eb1ba597c47ca0 |
| BLAKE2b-256 | 56fa1ce504f9f88b955f56f32be639d4a950cb68f4b2a2020b317d7118d0ce23 |
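To check a downloaded file against the published SHA256 digests, a streaming hash with Python's `hashlib` is enough. This is a minimal sketch. The `EXPECTED` mapping copies two of the digests listed in the file details, and the helper names are illustrative:

```python
import hashlib
from pathlib import Path

# SHA256 digests copied from the file details for getdaft 0.3.8.
EXPECTED = {
    "getdaft-0.3.8.tar.gz": "6a43235549125f856b388707acb1042781d8078a379b974fcf843e47ecaf713c",
    "getdaft-0.3.8-cp38-abi3-win_amd64.whl": "127d8434c2d951d7ade329847c67acb4f9c635de3115e65dea36000ab9dc704e",
}


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in fixed-size chunks so large wheels need not fit in memory."""
    digest = hashlib.sha256()
    with Path(path).open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(path, expected_sha256):
    """Return True if the file's SHA256 matches the expected hex digest."""
    return sha256_of(path) == expected_sha256.strip().lower()
```

For example: `verify("getdaft-0.3.8.tar.gz", EXPECTED["getdaft-0.3.8.tar.gz"])`. On Python 3.11+, `hashlib.file_digest(f, "sha256")` performs the same streaming read. For installs, pip's hash-checking mode does this check automatically. Pin `getdaft==0.3.8 --hash=sha256:<digest>` in a requirements file and run `pip install --require-hashes -r requirements.txt`.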